Case Report Open Access

Personalized Audio Assessment and Temporal Patterns of Dementia-Related Behavioral Disturbances

Armen C Arevian*, Heather Patel and Stephen T Chen

Semel Institute, University of California Los Angeles, CA, USA

*Corresponding Author:

Armen C Arevian

Semel Institute, University of California

Los Angeles, CA, USA

Tel: 310-794-3732

Fax: 310-794-3724

E-mail: aarevian@mednet.ucla.edu

Received date: June 30, 2016; Accepted date: August 02, 2016; Published date: August 09, 2016

Citation: Arevian AC, Patel H, Chen ST (2016) Personalized Audio Assessment and Temporal Patterns of Dementia-Related Behavioral Disturbances. J Alzheimers Dis Parkinsonism 6:252. doi:10.4172/2161-0460.1000252

Copyright: ©2016 Arevian AC, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.


Keywords

Vocally disruptive behaviors; Agitation; Alzheimer’s disease; Audio recording; Automated event detection; Temporal dynamics; Assessment

Introduction

Behavioral disturbances are common clinical features of many neuropsychiatric disorders, including dementia. They are challenging to assess and treat [1,2], diminish the quality of life of patients and caregivers [3] and precipitate institutionalization [4]. A key challenge in improving care and developing more effective interventions is the accurate assessment of behavioral disturbances [5], which is at present largely limited to observer-rated scales applied at a specific point in time. In this case report, we describe a novel method of assessing dementia-related vocally disruptive behaviors (VDB) [6] that uses audio recordings and automated analysis to objectively measure VDB, their temporal patterns and their response to treatment. This method of assessment could potentially be developed and implemented in care settings to measure specific types of behavioral disturbances more effectively and to guide treatment for individual patients.

Case Report

An 83-year-old man with a history of dementia and major depressive disorder was admitted to a university-based inpatient geriatric psychiatry unit for progressive VDB manifested by repeated loud grunting. His Mini Mental State Exam score on admission was 21/30. Outpatient medications included citalopram 20 mg daily, lithium 450 mg nightly, olanzapine 15 mg nightly and buspirone 30 mg twice daily. During hospitalization, he received 12 electroconvulsive therapy (ECT) treatments as well as olanzapine 5 mg three times daily, mirtazapine 30 mg nightly and trazodone 50 mg nightly. He was discharged on these medications as well as valproate 125 mg three times daily.

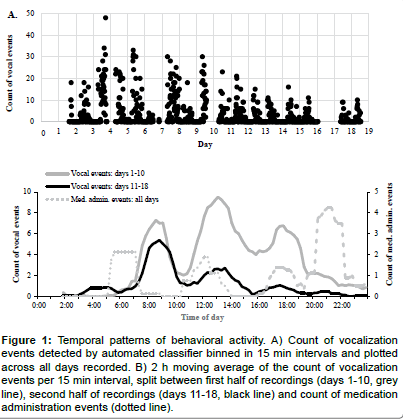

A key barrier identified in caring for this patient was the difficulty of assessing both symptom burden and response to treatment interventions (such as ECT or medication administration), which made selection of treatments and dosing challenging. To address this, we placed an inexpensive digital audio recorder (Sony ICD-PX820) in the patient’s breast pocket or on his nightstand and captured 19,605 min of audio over 18 days (76% of the total time). We then used off-the-shelf software (Song Scope, Wildlife Acoustics), designed to detect bird songs in nature [7], to train an automated audio classifier that identified a total of 3,179 behavioral events in the recordings. We assessed the accuracy of the algorithm by comparing its predicted count to our manual count of VDB events in 22 fifteen-minute samples, which yielded a sensitivity (true positive rate) of 92% and a positive predictive value of 85%. We were then able to visualize the pattern of behaviors throughout the recording period (Figure 1A), which showed decreased VDB over time. Interestingly, we observed a three-peak intraday pattern that was preserved throughout the period but with reduced amplitude in the second half of recordings (Figure 1B). This temporal pattern was similar to the pattern of medication administration events for antipsychotic or sedating medications (Figure 1B, dotted line).
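The accuracy and coverage figures above follow standard definitions, which can be sketched in a few lines of Python. The report does not give the underlying event tallies or describe how detections were matched to manual counts, so the tallies and function names below are hypothetical and purely illustrative; only the recording totals (19,605 min, 18 days, 3,179 events) come from the text.

```python
def sensitivity(true_pos, false_neg):
    """Fraction of manually counted events that the classifier detected."""
    return true_pos / (true_pos + false_neg)


def positive_predictive_value(true_pos, false_pos):
    """Fraction of classifier detections that were real events."""
    return true_pos / (true_pos + false_pos)


def recording_coverage(recorded_min, days):
    """Recorded minutes as a fraction of all minutes in the period."""
    return recorded_min / (days * 24 * 60)


# Hypothetical tallies for illustration only (NOT data from the case report).
example_sens = sensitivity(true_pos=40, false_neg=10)
example_ppv = positive_predictive_value(true_pos=40, false_pos=10)

# Totals taken from the report: 19,605 min over 18 days, 3,179 events.
coverage = recording_coverage(recorded_min=19605, days=18)
events_per_recorded_hour = 3179 / (19605 / 60)

print(f"example sensitivity: {example_sens:.2f}")
print(f"example PPV: {example_ppv:.2f}")
print(f"coverage: {coverage:.0%}")
print(f"events per recorded hour: {events_per_recorded_hour:.1f}")
```

The coverage calculation reproduces the 76% stated in the report. The events-per-hour figure is a derived average over recorded time and is not reported by the authors.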

Figure 1: Temporal patterns of behavioral activity. A) Count of vocalization events detected by automated classifier binned in 15 min intervals and plotted across all days recorded. B) 2 h moving average of the count of vocalization events per 15 min interval, split between first half of recordings (days 1-10, grey line), second half of recordings (days 11-18, black line) and count of medication administration events (dotted line).
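The binning and smoothing described in the caption can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function names are hypothetical, and both the window alignment and the wrap-around at midnight are assumptions, since the report specifies only 15 min bins and a 2 h moving average.

```python
from datetime import datetime

BIN_MIN = 15                          # bin width in minutes (from the caption)
BINS_PER_DAY = 24 * 60 // BIN_MIN     # 96 bins per day
WINDOW_BINS = 120 // BIN_MIN          # a 2 h window spans 8 bins


def bin_by_time_of_day(timestamps):
    """Count events in each 15 min time-of-day bin, pooled across days."""
    counts = [0] * BINS_PER_DAY
    for ts in timestamps:
        counts[(ts.hour * 60 + ts.minute) // BIN_MIN] += 1
    return counts


def circular_moving_average(values, window=WINDOW_BINS):
    """Moving average that wraps around midnight (an assumption).

    For bin i the window covers bins i - window//2 through
    i + window - window//2 - 1, with indices taken modulo len(values).
    """
    n = len(values)
    half = window // 2
    smoothed = []
    for i in range(n):
        total = sum(values[(i + k) % n] for k in range(-half, window - half))
        smoothed.append(total / window)
    return smoothed


# Hypothetical event times for illustration only (NOT data from the report).
events = [
    datetime(2016, 1, 1, 0, 14),
    datetime(2016, 1, 1, 0, 15),
    datetime(2016, 1, 2, 23, 59),
]
counts = bin_by_time_of_day(events)
profile = circular_moving_average(counts)
```

Splitting the input timestamps by recording day before calling `bin_by_time_of_day` would give separate profiles for the first and second halves of the recording period, as in Figure 1B.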

Discussion

A promising new direction for the assessment and treatment of behavioral disturbances is real-time sensing in conjunction with automated detection of behavioral events [8], including signals derived from audio [9]. In this case report, we demonstrate that audio recording and automated analysis using off-the-shelf components enabled an objective characterization of this patient’s VDB over an extended period of time and enabled us to explore relationships of this behavior to treatment interventions and time of day. Interpretation of these relationships is limited by the presence of multiple factors that could influence them, including the use of concomitant medications, ECT, environmental cues and circadian rhythms [10]. Although case reports have limitations, our findings suggest the potential for the clinical use of automated audio assessments and highlight the need for systematic controlled studies to investigate their utility. Future studies may explore the use of these automated assessments, possibly in combination with other biomarkers, to better understand disease pathology, the temporal relation between behaviors, treatment interventions and changes in biological state, as well as to inform advances in precision medicine approaches in the care of dementia-related behaviors.

References

1. De Deyn PP, Wirshing WC (2001) Scales to assess efficacy and safety of pharmacologic agents in the treatment of behavioral and psychological symptoms of dementia. J Clin Psychiatry 62 Suppl 21:19-22.

2. Daly JM, Bay CP, Levy BT, Carnahan RM (2015) Caring for people with dementia and challenging behaviors in nursing homes: A needs assessment. Geriatric Nursing 36:182-191.

3. Khoo SA, Chen TY, Ang YH, Yap P (2013) The impact of neuropsychiatric symptoms on caregiver distress and quality of life in persons with dementia in an Asian tertiary hospital memory clinic. Int Psychogeriatr 25:1991-1999.

4. Okura T, Plassman BL, Steffens DC, Llewellyn DJ, Potter GG, et al. (2011) Neuropsychiatric symptoms and the risk of institutionalization and death: the aging, demographics, and memory study. J Am Geriatr Soc 59:473-481.

5. Ashley EA (2015) The Precision Medicine Initiative: a new national effort. JAMA 313:2119-2122.

6. von Gunten A, Alnawaqil AM, Abderhalden C, Needham I, Schupbach B (2008) Vocally disruptive behavior in the elderly: a systematic review. Int Psychogeriatr 20:653-672.

7. Holmes SB, McIlwrick KA, Venier LA (2014) Using automated sound recording and analysis to detect bird species-at-risk in southwestern Ontario woodlands. Wildl Soc Bull 38:591-598.

8. Narayanan S, Georgiou PG (2013) Behavioral Signal Processing: Deriving Human Behavioral Informatics From Speech and Language. Proc IEEE Inst Electr Electron Eng 101:1203-1233.

9. Cohen-Mansfield J, Werner P, Hammerschmidt K, Newman JD (2003) Acoustic Properties of Vocally Disruptive Behaviors in the Nursing Home. Gerontology 49:161-167.

10. Karatsoreos IN (2014) Links between Circadian Rhythms and Psychiatric Disease. Front Behav Neurosci 8:162.
