Research Article Open Access

Recognition of Environmental Emotional Sounds in Alzheimer’s Disease

Domenico Passafiume*, Nicoletta Caputi, Lucia Serenella De Federicis, Marta Colantonio and Dina Di Giacomo

Department of Clinical Medicine, Public Health, Life and Environment Sciences, University of L’Aquila, Italy

Corresponding Author:

Domenico Passafiume

Department of Clinical Medicine

Public Health, Life and Environment Sciences (MESVA)

University of L’Aquila, Italy

Tel: +390862434694

E-mail: domenico.passafiume@cc.univaq.it

Received date: November 02, 2015; Accepted date: March 01, 2016; Published date: March 08, 2016

Citation: Passafiume D, Caputi N, De Federicis LS, Colantonio M, Di Giacomo D (2016) Recognition of Environmental Emotional Sounds in Alzheimer’s Disease. J Alzheimers Dis Parkinsonism 6:217. doi:10.4172/2161-0460.1000217

Copyright: © 2016 Passafiume D, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.


Abstract

Objective: Patients affected by Alzheimer’s disease show deficits in emotion processing, and inappropriate social behaviors during emotional situations have been observed clinically. The aim of our study was to explore the ability of patients with Alzheimer’s disease to ascribe emotional meaning to environmental or human emotional sounds, in order to understand whether the impairment concerns the meaning of environmental sounds or their emotional attribute.

Methods: Thirty participants were included in the study. The sample comprised two groups: 1) 15 patients with a diagnosis of probable Alzheimer’s disease; 2) 15 healthy comparison subjects matched with the patient group.

Participants underwent a neuropsychological evaluation that included standardized tests (Mini-Mental State Examination, Boston Naming Test and Token Test) and experimental tasks. The experimental battery was composed of four tasks: the Noise Recognition Task, the Emotion Naming Task, the Emotion Discrimination Task and the Sound and Emotion Association Task. These tasks were chosen to mirror ecological situations in which patients have to infer the feelings elicited by sounds.

Results: Analysis of variance showed that patients affected by Alzheimer’s disease performed significantly worse than healthy comparison subjects (p<0.001) on the experimental battery.

Conclusion: The findings suggested, as expected, that patients with Alzheimer’s disease were less efficient than healthy comparison subjects in processing emotion, even though the two groups showed a similar trend. When patients have to associate visual and auditory stimuli, they have more difficulty in establishing which facial expression the sound belongs to than in identifying the meaning of the facial expressions or of the sounds.

Keywords

Emotion processing; Alzheimer’s disease; Environmental sounds; Emotional sounds

Introduction

Alzheimer’s Disease (AD) is a neurodegenerative disorder associated with a gradual decline in brain functioning [1], where memory is usually the first deficit. The decline of brain functions eventually affects all the cognitive [1,2] and affective domains within the brain.

Emotion processing in AD has been widely studied. It refers to the cognitive ability to recognize emotional states in other people and/or to communicate one’s own feelings. The ability to process emotions is fundamental for appropriate social interaction, and its impairment may cause distress in both patients and caregivers. It can lead to a poor quality of life by reducing interpersonal communication [3,4] and can influence social behavior in patients [4].

It has been clearly shown that patients affected by AD have deficits in emotion processing, an ability that depends on neural areas, such as the hippocampus, the amygdala and the posterior association areas, that are often impaired from the early stages of the disease [5,6]. In fact, facial emotion processing can be impaired in Mild Cognitive Impairment (MCI), even before more marked cognitive deficits appear [7].

Most studies have used tasks, such as naming tasks, based on visual stimuli and particularly on facial expressions: these stimuli are static and are usually presented briefly and in a single sensory modality. These studies have demonstrated that AD patients had more difficulty in identifying emotional facial expressions than healthy comparison subjects. Behavioral studies have also provided evidence of deficits among individuals with AD in recognizing facial emotions [8,9], specifically happiness, sadness, and fear [10].

The ability to recognize different facial expressions in AD decreases with the progression of dementia and could be related to the degeneration of the structures implicated in the emotional processing system [11].

Rosen et al. [12] observed that impaired recognition of negative emotions in patients with dementia (including patients with AD, MCI, or frontotemporal lobar degeneration) was associated with right temporal gyrus atrophy.

On the other hand, the ability to process emotional auditory stimuli has been largely under-investigated, even though multiple sensory channels are involved in daily life when processing emotional situations or objects.

In healthy subjects, several studies have underlined that visual and auditory emotion processing share similar neural areas, since the amygdala receives input from all senses via the thalamus [13-16]. Moreover, emotional stimuli presented through different modalities seem to activate the same core network: visual, auditory and olfactory emotional stimuli activate the orbitofrontal cortex, the temporal pole, and the superior frontal gyrus in the left hemisphere [17]. It has also been shown that the left amygdala is sensitive to the valence of pictures and negative sounds, and that the right amygdala is sensitive to the valence of positive pictures [18]. The orbitofrontal cortex, the superior temporal gyrus, the amygdala and the anterior insula showed increased activation during the processing of both emotional and social stimuli, independent of the sensory modality (visual or auditory stimuli) [19].

There is strong evidence that emotion processing in one modality can influence emotion processing in another: for example, emotion processing is faster for multimodal congruent stimuli (e.g. a pleasant complex visual scene or face paired with a pleasant sound) than for incongruent ones [20].

Regarding Alzheimer’s Disease, even though most studies used facial stimuli, emotion processing has also been assessed through auditory stimuli such as human voice recordings for prosody and audiotaped or videotaped stories [21].

Furthermore, it was found that using music with an emotional meaning could help patients with Alzheimer’s disease to recall autobiographical events [22].

Moreover, to the best of our knowledge, only one study [23] has investigated the ability to recognize emotion in Alzheimer’s disease by also using emotional environmental sounds (e.g. laughing, crying). The authors did not find a significant difference between patients affected by Alzheimer’s Disease and healthy comparison subjects in this task, suggesting that emotion processing ability varied across different types of tasks.

Although, as reported above, the literature provides considerable evidence that emotion processing in AD patients is impaired when assessed by naming and discrimination tasks using visual stimuli, emotion processing through auditory stimuli is largely under-investigated.

Starting from the clinical evidence that patients affected by Alzheimer’s Disease often show inappropriate social behaviors during emotional situations, the aim of our study was to explore the ability of AD patients to ascribe emotional meaning to environmental or human emotional sounds, compared with healthy elderly participants. In particular, we evaluated the ability to associate an environmental sound with the appropriate emotional facial expression and how this ability is affected by the ability to name and discriminate both facial expressions and environmental sounds, in order to understand whether the impairment concerns the meaning of environmental sounds or their emotional attribute. The hypothesis is that AD patients show impairments in auditory emotion processing similar to those shown in the visual modality, since the neural substrates of the two modalities are similar, as reported in healthy subjects. This approach is novel in this field and could highlight the features connected to emotion deterioration in Alzheimer’s patients.

Methods

Subjects and data collection

Thirty participants, all native Italian speakers, were included in the study. The sample was divided into two groups: 1) 15 patients (6 male and 9 female) with a diagnosis of probable Alzheimer’s disease [AD group] (age: M=77.2, SD=4.76; education: M=4.6, SD=1.14); 2) 15 healthy comparison subjects [HC group] matched with the AD group by age, sex and educational level (6 male and 9 female) (age: M=72.66, SD=7.2; education: M=5.91, SD=4.12).

Diagnoses of AD were made by neurologists independent of the study, according to the criteria of the National Institute of Neurological and Communicative Disorders and Stroke and the Alzheimer’s Disease and Related Disorders Association (NINCDS-ADRDA) [24]. Only patients with sufficient comprehension were included in the study. The patients had a score between 17 and 23 on the Mini-Mental State Examination [25].

The healthy comparison subjects had no history of alcohol or drug abuse or of neurological or psychiatric disease. Informed consent was obtained from all participants or, where appropriate, from their caregiver. An ethics committee approved the study.

Materials

Participants underwent a neuropsychological evaluation that included standardized tests and experimental tasks.

The standardized tests were the Mini-Mental State Examination [25], the Boston Naming Test [26] and the Token Test [27].

This battery was chosen to verify whether patients had deficits in naming and/or comprehension that could explain their performance on the experimental tasks.

The standardized tasks were also used to verify that the healthy comparison subjects did not have any neuropsychological impairment (Table 1).

| Group | n. | MMSE | TT | BNT |
|---|---|---|---|---|
| AD | 15 | 19.15 (3.42) | 26.8 (2.30) | 23.6 (5.02) |
| HC | 15 | 27.90 (1.93) | 34.5 (1.23) | 39.66 (7.30) |
| ANOVA between-subjects effects | | F(1,28)=73.94, p<0.001 | F(1,28)=131.60, p<0.001 | F(1,28)=49.06, p<0.001 |

Mean (SD) of correct answers for the standardized neuropsychological tasks: Mini-Mental State Examination (MMSE), Token Test (TT), Boston Naming Test (BNT). Between-subjects effects for the ANOVA are reported in the last row.

Table 1: Neuropsychological battery.

The experimental battery was composed of four tasks: the Noise Recognition Task, the Emotion Naming Task, the Emotion Discrimination Task and the Sound and Emotion Association Task.

A pilot study was conducted to investigate the feasibility and effectiveness of the stimuli chosen for the experimental tasks.

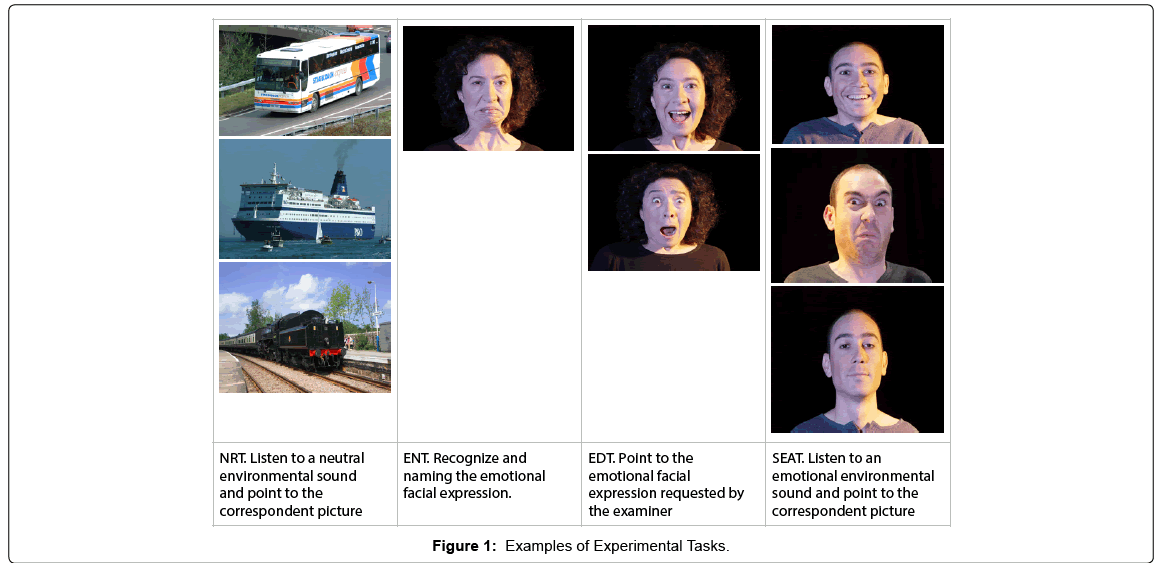

The Noise Recognition Task (NRT) consisted of 10 neutral environmental sounds lasting 5 seconds each. Participants had to recognize the environmental sounds and choose, among three alternative photographs representing three objects (e.g. train, ship, bus), the one that corresponded to the sound heard through the earphones (e.g. ship sound).

The Emotion Naming Task (ENT) (14 items) consisted of photographs of six basic facial expressions (happiness, surprise, fear, disgust, sadness and anger). In this task, participants were required to recognize and name each facial expression of emotion. Stimuli were presented one by one.

The Emotion Discrimination Task (EDT) consisted of 14 items. Two photographs of facial expressions representing two different emotions were shown simultaneously to the participants. Participants were asked to point to the photograph that represented the emotion requested by the examiner (e.g. “Point to happiness”).

The Sound and Emotion Association Task (SEAT) consisted of 28 items. The subject’s task was to choose, among three alternative photographs representing emotional facial expressions, the one that evoked the emotion associated with the sound heard (e.g. applause for a win with surprised face; roar of a lion with frightened face, etc.).

The tasks were presented in a pseudo-random sequence, in order to avoid learning effects.

The number of correct answers was calculated for each task (Figure 1).

Results

Statistical analysis

The data were analyzed using Statistica 7 [28] for Windows. Analyses of variance were used to evaluate the ability of AD patients to ascribe emotional significance to environmental or human emotional sounds, compared with healthy elderly participants.

Scores obtained on both the standardized tests and the experimental tasks were transformed into z scores of correct responses, based on the mean and standard deviation of the whole sample, so that tasks composed of different numbers of items could be compared.
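The standardization step can be illustrated with a minimal sketch. The raw scores below are hypothetical values invented for illustration, not the study data, and the use of the sample standard deviation (n - 1 denominator) is an assumption, since the article does not specify which estimator was used.

```python
from statistics import mean, stdev


def z_scores(raw_scores):
    """Standardize raw scores using the mean and SD of the whole sample."""
    m = mean(raw_scores)
    sd = stdev(raw_scores)  # assumption: sample SD (n - 1 denominator)
    return [(x - m) / sd for x in raw_scores]


# Hypothetical correct-answer counts for six participants on two tasks
# with different numbers of items (a 10-item task and a 28-item task).
nrt_raw = [6, 7, 5, 9, 10, 10]
seat_raw = [12, 15, 11, 22, 24, 23]

nrt_z = z_scores(nrt_raw)
seat_z = z_scores(seat_raw)

# After standardization both tasks are on the same scale:
# mean 0 and SD 1 across the whole sample.
print(round(mean(nrt_z), 6), round(stdev(nrt_z), 6))
print(round(mean(seat_z), 6), round(stdev(seat_z), 6))
```

Because every task is standardized over the whole sample, each task has a mean z score of zero. With equal group sizes, a main effect of task would therefore be expected to be zero by construction, which appears consistent with the F(3,84)=0.00 reported for the task factor.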

Results were considered statistically significant at the p<0.05 level.

Standardized tasks

Performance of the groups on the standardized tasks was compared using a 2 (groups) × 3 (standardized tasks) multivariate analysis of variance (MANOVA).

Table 1 shows the mean and standard deviation obtained by the participants on the standardized battery.

The analysis revealed a significant multivariate effect of group (F(3,26)=59.21, p<0.0001) and significant between-subjects effects on the Mini-Mental State Examination (F(1,28)=73.94, p<0.001), the Token Test (F(1,28)=131.60, p<0.001) and the Boston Naming Test (F(1,28)=49.06, p<0.001).
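As a rough consistency check, the univariate F values can be approximated from the group means and standard deviations in Table 1. The sketch below uses the standard one-way ANOVA formulas on summary statistics. The function name `one_way_f` is introduced here for illustration, and small discrepancies from the published values are expected because the table entries are rounded.

```python
def one_way_f(groups):
    """One-way ANOVA F statistic from per-group (n, mean, sd) summaries."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    # Between-group and within-group sums of squares.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# (n, mean, SD) for the AD and HC groups, copied from Table 1.
table1 = {
    "MMSE": [(15, 19.15, 3.42), (15, 27.90, 1.93)],
    "TT": [(15, 26.8, 2.30), (15, 34.5, 1.23)],
    "BNT": [(15, 23.6, 5.02), (15, 39.66, 7.30)],
}

results = {name: one_way_f(groups) for name, groups in table1.items()}
for name, (f_value, df1, df2) in results.items():
    print(f"{name}: F({df1},{df2}) = {f_value:.2f}")
```

With these inputs the sketch gives F values of roughly 74.5, 130.7 and 49.3 on (1,28) degrees of freedom, close to the reported 73.94, 131.60 and 49.06. The F statistic is unchanged by the z transformation, so raw-score summaries can be used for this check.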

Experimental tasks

Performance of the groups on the experimental tasks was compared using a 2 (groups) × 4 (experimental tasks) analysis of variance (ANOVA) for repeated measures.

Table 2 shows the mean and standard deviation obtained by the participants on the experimental battery.

| Group | n. | NRT | ENT | EDT | SEAT |
|---|---|---|---|---|---|
| AD | 15 | 6.1 (0.70) | 7.5 (2.3) | 11.0 (2.1) | 13.21 (2.92) |
| HC | 15 | 10.8 (1.05) | 12.4 (1.7) | 13.8 (0.38) | 22.8 (1.90) |
| ANOVA main effect of group: F(1,28)=227.55, p<0.001 | | | | | |

Mean (SD) of correct answers for the experimental neuropsychological tasks: Noise Recognition Task (NRT); Emotion Naming Task (ENT); Emotion Discrimination Task (EDT); Sound and Emotion Association Task (SEAT). The main effect of group in the ANOVA is reported in the last row.

Table 2: Experimental tasks to assess emotion processing.

The analysis revealed a main effect of group (F(1,28)=227.55, p<0.001).

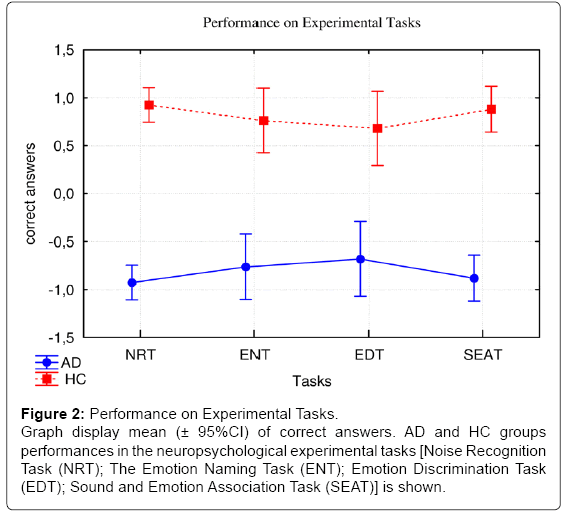

However, no significant differences were found between the experimental tasks (F(3,84)=0.00; p>0.05). The interaction between groups and tasks was also not significant (F(3,84)=1.21; p>0.05) (Figure 2).

Figure 2: Performance on Experimental Tasks.

The graph displays the mean (± 95% CI) of correct answers for the AD and HC groups in the experimental neuropsychological tasks [Noise Recognition Task (NRT); Emotion Naming Task (ENT); Emotion Discrimination Task (EDT); Sound and Emotion Association Task (SEAT)].
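For readers without access to the figure, confidence intervals of this kind can be approximated from the summary statistics in Table 2. The sketch below assumes a standard t-based interval on raw scores with n = 15 per group. Whether Figure 2 was drawn from raw or z scores is not stated, so the numbers are illustrative only. The helper `mean_ci` is a hypothetical name introduced here.

```python
from math import sqrt

# Two-sided 95% critical value of Student's t with 14 degrees of freedom
# (n = 15 per group), taken from standard t tables.
T_CRIT_DF14 = 2.145


def mean_ci(mean, sd, n, t_crit):
    """Return (lower, upper) bounds of a t-based confidence interval."""
    half_width = t_crit * sd / sqrt(n)
    return mean - half_width, mean + half_width


# SEAT means and SDs for the two groups, copied from Table 2.
seat_ad = mean_ci(13.21, 2.92, 15, T_CRIT_DF14)
seat_hc = mean_ci(22.8, 1.90, 15, T_CRIT_DF14)

print(f"AD SEAT 95% CI: {seat_ad[0]:.2f} to {seat_ad[1]:.2f}")
print(f"HC SEAT 95% CI: {seat_hc[0]:.2f} to {seat_hc[1]:.2f}")
```

Under these assumptions the two intervals do not overlap, in line with the significant main effect of group.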

To determine whether the main effect of group was due to the confounding influence of the standardized task scores, we entered these variables as covariates in the analysis. The effects of MMSE (F(1,27)=0.48, p>0.05) and Token Test (F(1,27)=0.15, p>0.05) as covariates were not significant. On the other hand, we found a significant effect of the Boston Naming Test as a covariate (F(1,27)=6.80, p<0.05), but the main effect of group remained significant in this case as well (F(1,27)=145.72, p<0.001).

Association between sounds and emotions

A stepwise multiple regression (forward method) was conducted to evaluate whether the abilities to recognize neutral environmental sounds, to name facial expressions and to discriminate among different facial expressions predicted performance on the Sound and Emotion Association Task. At step 1 of the analysis, the NRT score entered the regression equation and was significantly related to SEAT scores (F(1,28)=63.66, p<0.001). The multiple correlation coefficient was 0.83, indicating that approximately 68.4% of the variance of the SEAT scores could be accounted for by NRT scores. ENT scores did not enter the equation at step 1 of the analysis (t=0.62, p>0.05).

At step 2 of the analysis, both NRT and EDT scores entered the regression equation and were significantly related to SEAT scores (F(2,27)=97.77, p<0.001). The standardized regression coefficients were 0.57 for NRT scores and 0.50 for EDT scores, and approximately 87.9% of the variance of the SEAT scores could be accounted for by NRT and EDT scores (18.4% more than in the first model). ENT scores did not enter the equation at step 2 of the analysis (t = 0-0.52, p>0.05).

Each of the scores is significantly correlated with the criterion, indicating that participants with lower scores in the neutral environmental noise recognition and emotion discrimination tasks tend to perform worse in associating emotional environmental sounds with the appropriate emotional facial expression.
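The proportions of explained variance can be cross-checked against the reported F statistics. For an ordinary least-squares regression with an intercept, R² = F·df1 / (F·df1 + df2). The sketch below applies this identity to the two models; the function names are hypothetical, and the interpretation in the closing note is an inference from the reported numbers rather than something the authors state.

```python
def r_squared_from_f(f_value, df_model, df_resid):
    """Recover R^2 from the overall F test of an OLS regression."""
    return (f_value * df_model) / (f_value * df_model + df_resid)


def adjusted_r_squared(r2, n, n_predictors):
    """Adjusted R^2 for a model with an intercept and n_predictors terms."""
    return 1 - (1 - r2) * (n - 1) / (n - n_predictors - 1)


n = 30  # total sample size reported in the study

r2_step1 = r_squared_from_f(63.66, 1, 28)  # step 1: NRT only
r2_step2 = r_squared_from_f(97.77, 2, 27)  # step 2: NRT and EDT
adj_step1 = adjusted_r_squared(r2_step1, n, 1)
r2_change = r2_step2 - r2_step1

print(f"Step 1: R2 = {r2_step1:.3f}, adjusted R2 = {adj_step1:.3f}")
print(f"Step 2: R2 = {r2_step2:.3f}, change = {r2_change:.3f}")
```

On these figures, step 1 gives R² of about 0.695 (adjusted about 0.684), step 2 gives R² of about 0.879, and the increase is about 0.184. This suggests that the 68.4% quoted for the first model may be an adjusted value, while the 87.9% and the 18.4% increment match unadjusted R².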

Table 3 summarizes the descriptive statistics and analysis results.

| Variables | Pearson correlations with SEAT |
|---|---|
| NRT | 0.83* |
| EDT | 0.80* |
| ENT | 0.69* |

| Regression analysis | Model 1 | Model 2 |
|---|---|---|
| Model fit | F(1,28)=63.66, p<0.001 | F(2,27)=97.77, p<0.001 |
| Predictors | NRT: b=0.83; t=7.97, p<0.001 | NRT: b=0.57; t=7.38, p<0.001; EDT: b=0.50; t=6.40, p<0.001 |
| Excluded variables | ENT: t=0.62, p>0.05 | ENT: t=0.60, p>0.05 |

*p<0.001

Pearson correlations and regression analysis between Noise Recognition Task (NRT), Emotion Naming Task (ENT), Emotion Discrimination Task (EDT) and Sound and Emotion Association Task (SEAT).

Table 3: Results of correlations and regression analysis.

Discussion and Conclusion

Starting from the clinical evidence that patients affected by Alzheimer’s Disease often show inappropriate social behaviors during emotional situations, in this study we explored the ability of AD patients to ascribe emotional meaning to environmental or human emotional sounds, compared with healthy elderly participants.

We considered different features of emotion processing: in particular, the ability to name emotional facial expressions, the ability to discriminate among different emotional facial expressions, and the ability to recognize environmental sounds.

We also evaluated the ability to associate an environmental sound with the appropriate emotional facial expression and explored how naming and discrimination of both facial expressions and environmental sounds affect this ability.

We compared patients affected by Alzheimer’s Disease and healthy comparison older adults, to determine the possibility of identifying factors involved in the inappropriate social behaviors observed during emotional situations and to understand if environmental sounds are impaired in their meaning or in their emotional attribute.

The findings suggested, as expected, that the AD group was less efficient than the HC group in processing emotion. The performance of the AD group was impaired in both naming and recognizing emotional facial expressions. Moreover, these patients had difficulties in identifying environmental sounds and in associating emotional sounds with facial expressions that communicate different emotions. On the other hand, the HC group was equally efficient in all the tasks.

Our results suggest that the performance of patients in associating visual and auditory stimuli is explained mainly by the difficulty in establishing which facial expression the sound belongs to (e.g. laughing with a smiling person) (discrimination ability), rather than in identifying the meaning of the facial expressions (naming ability).

Koff et al. [23], who used nonverbal sounds to investigate emotion processing in Alzheimer’s disease, did not find a significant difference between AD group and healthy elderly participants.

This discrepancy is probably due to the differences between our tasks, which require associating an environmental sound with a facial expression, and the task of Koff et al., which uses only human vocalizations and requires pointing, in an array of six faces, to the line drawing of a face depicting the emotion associated with the sound.

Our task is probably more difficult because it includes not only human vocalizations but also a wide range of natural and artificial environmental sounds, from which patients have to infer the associated feelings.

In conclusion, our results suggest that the deterioration of emotion processing in dementia of the Alzheimer type shows a similar trend in the visual and auditory modalities.

Further research is needed to identify more sensitive instruments that clarify the role of environmental cues in detecting emotions and that simulate more ecological situations.

Moreover, it is important to clarify whether emotion association ability could be a strong diagnostic indicator to detect semantic deficits in the earliest phases of disease and compare its sensitivity relative to neuropsychological tests that are generally used for clinical assessment.

These findings are of interest, but the present study has some limitations that should be noted. First, the sample size was relatively small, which limits the statistical power of the analyses, although it is similar to that of other studies in the field. Moreover, the number of subjects is not sufficient to divide the sample into subgroups on the basis of disease severity. Second, we did not have any data about the presence of visual and auditory agnosia in these patients; further research needs to clarify whether there is a correlation between agnosia and emotional associative impairment in Alzheimer’s disease.

Future investigations may also be directed towards examining the benefit of using emotional associative tasks in basic neuropsychological assessment and in using auditory stimuli as cues in the rehabilitation of the emotion processing.

Apart from the above limitations, this study also has a strength compared with previous ones: the use of a more ecological task based on the assessment of environmental sounds, which reflect a more natural context.

Tasks based on naming facial expressions, in fact, inadequately mirror the context of the natural environment.

Our results may have an important impact in clinical settings and in the daily management of patients, since nonverbal tools can be helpful as an alternative or complementary means of communication. Using body movements, gestures and prosody while communicating with AD patients can help them to correctly identify and interpret emotional situations. Furthermore, a positive attitude in the relationship between clinicians or caregivers and patients can prevent negative behaviors.

The early detection of emotion recognition difficulties is therefore important to establish appropriate interventions, given the significant personal impact and the societal costs.

References

- Buckner RL (2004) Memory and executive function in aging and AD: multiple factors that cause decline and reserve factors that compensate. Neuron 44: 195-208.

- Bäckman L, Jones S, Berger AK, Laukka EJ, Small BJ (2004) Multiple cognitive deficits during the transition to Alzheimer's disease. J Intern Med 256: 195-204.

- Phillips LJ, Reid-Arndt SA, Pak Y (2010) Effects of a creative expression intervention on emotions, communication, and quality of life in persons with dementia. Nurs Res 59: 417-425.

- Shimokawa A, Yatomi N, Anamizu S, Torii S, Isono H, et al. (2001) Influence of deteriorating ability of emotional comprehension on interpersonal behavior in Alzheimer-type dementia. Brain Cogn 47: 423-433.

- Kemper TL (1994) Neuroanatomical and neuropathological changes during aging and in dementia. In ML Albert and JE Knoepfel (Eds.), Clinical neurology of aging (2nd ed., pp. 3-67). New York: Oxford University Press.

- Passafiume D, Giacomo D (2006) The Alzheimer's dementia. Guide intervention of cognitive and behavioral stimulation, FrancoAngeli.

- Teng E, Lu PH, Cummings JL (2007) Deficits in facial emotion processing in mild cognitive impairment. Dement Geriatr Cogn Disord 23: 271-279.

- Guaita A, Malnati M, Vaccaro R, Pezzati R, Marcionetti J, et al. (2009) Impaired facial emotion recognition and preserved reactivity to facial expressions in people with severe dementia. Arch Gerontol Geriatr 49 Suppl 1: 135-146.

- McLellan T, Johnston L, Dalrymple-Alford J, Porter R (2008) The recognition of facial expressions of emotion in Alzheimer’s disease: a review of findings. Acta Neuropsychiatrica 20: 236-250.

- Kohler CG, Anselmo-Gallagher G, Bilker W, Karlawish J, Gur RE, et al. (2005) Emotion-discrimination deficits in mild Alzheimer disease. Am J Geriatr Psychiatry 13: 926-933.

- Lavenu I, Pasquier F, Lebert F, Petit H, Van der Linden M (1999) Perception of emotion in frontotemporal dementia and Alzheimer disease. Alzheimer Dis Assoc Disord 13: 96-101.

- Rosen HJ, Wilson MR, Schauer GF, Allison S, Gorno-Tempini ML, et al. (2006) Neuroanatomical correlates of impaired recognition of emotion in dementia. Neuropsychologia 44: 365-373.

- Phan KL, Wager T, Taylor SF, Liberzon I (2002) Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. Neuroimage 16: 331-348.

- Klinge C, Röder B, Büchel C (2010) Increased amygdala activation to emotional auditory stimuli in the blind. Brain 133: 1729-1736.

- Gottfried JA, O'Doherty J, Dolan RJ (2002) Appetitive and aversive olfactory learning in humans studied using event-related functional magnetic resonance imaging. J Neurosci 22: 10829-10837.

- O'Doherty J, Rolls ET, Francis S, Bowtell R, McGlone F (2001) Representation of pleasant and aversive taste in the human brain. J Neurophysiol 85: 1315-1321.

- Royet JP, Zald D, Versace R, Costes N, Lavenne F, et al. (2000) Emotional responses to pleasant and unpleasant olfactory, visual, and auditory stimuli: a positron emission tomography study. J Neurosci 20: 7752-7759.

- Anders S, Eippert F, Weiskopf N, Veit R (2008) The human amygdala is sensitive to the valence of pictures and sounds irrespective of arousal: an fMRI study. Soc Cogn Affect Neurosci 3: 233-243.

- Scharpf KR, Wendt J, Lotze M, Hamm AO (2010) The brain's relevance detection network operates independently of stimulus modality. Behav Brain Res 210: 16-23.

- Gerdes AB, Wieser MJ, Alpers GW (2014) Emotional pictures and sounds: a review of multimodal interactions of emotion cues in multiple domains. Front Psychol 5: 1351.

- Klein-Koerkamp Y, Beaudoin M, Baciu M, Hot P (2012) Emotional decoding abilities in Alzheimer's disease: a meta-analysis. J Alzheimers Dis 32: 109-125.

- Meilán García JJ, Iodice R, Carro J, Sánchez JA, Palmero F, et al. (2012) Improvement of autobiographic memory recovery by means of sad music in Alzheimer's Disease type dementia. Aging Clin Exp Res 24: 227-232.

- Koff E, Zaitchik D, Montepare J, Albert MS (1999) Emotion processing in the visual and auditory domains by patients with Alzheimer's disease. J Int Neuropsychol Soc 5: 32-40.

- McKhann GM, Knopman DS, Chertkow H, Hyman BT, Jack CR, et al. (2011) The diagnosis of dementia due to Alzheimer’s disease: Recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimers Dement 7: 263-269.

- Folstein MF, Folstein SE, McHugh PR (1975) “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 12: 189-198.

- Kaplan EF, Goodglass H, Weintraub S (1983) The Boston naming test (2nd Ed.). Philadelphia: Lea & Febiger.

- De Renzi E, Vignolo LA (1962) The Token Test: A sensitive test to detect receptive disturbances in aphasics. Brain 85: 665-678.

- Statsoft (2006) Statistica, Statsoft Italia s.r.l.
