Editorial Open Access

Compressive Sensing: Real-time Data Acquisition and Analysis for Biosensors and Biomedical Instrumentation

Janet M. Roveda and Linda S. Powers*

Department of Electrical and Computer Engineering, University of Arizona, USA

*Corresponding Author: Powers LS

Department of Electrical and Computer Engineering

University of Arizona, USA

Tel: +1 520-621-2211

E-mail: lspowers@email.arizona.edu

Received date: July 27, 2015; Accepted date: July 29, 2015; Published date: July 31, 2015

Citation: Roveda JM, Powers LS (2015) Compressive Sensing: Real-time Data Acquisition and Analysis for Biosensors and Biomedical Instrumentation. Biosens J 4:e105. doi:10.4172/2090-4967.1000e105

Copyright: © 2015 Roveda JM, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Editorial

The rapid growth of sensors across a vast range of applications has required that CPUs and other processors be built into data acquisition systems for communication, position tracking, health care monitoring, environmental monitoring, and transportation. In addition, advances in nanometer electronic systems, compressive sensing (CS) [1-11] based information processing, and stream computing technologies offer great potential for creating novel hardware/software platforms with fast data acquisition capability. Driven by these developments, it is now possible to develop a high-speed “Adaptive Design for Information” (ADI) system that leverages feature-based data compression, low-power nanometer CMOS technology, and stream computing for biomedical instrumentation.

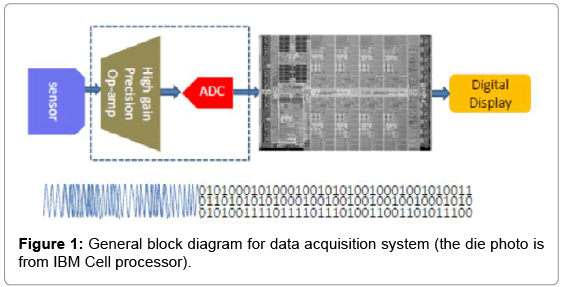

Figure 1 shows a typical data acquisition system for biomedical instrumentation. It consists of a sensor with an analog mixed-signal front end and a stream processor. The performance of these two components is very different. Most analog front ends consume about two thirds of the total chip area. Although their power consumption is in the μW to mW range, analog front ends sample and process data far more slowly than digital processors. For example, a typical 24-bit Texas Instruments A/D converter [12] delivers 125k sps (samples per second), which corresponds to a data rate of 3 Mbps (megabits per second). Compared with the 197 Gb/s of a Cell processor SPU, the analog front end is several orders of magnitude slower. Given this headroom in current stream processors, we can consider real-time control of the analog front end so that it acquires only USEFUL samples. The term “useful samples” refers to the samples that carry the most important information. It is well known that most of today’s data acquisition systems discard a large amount of data just before transmission. For example, JPEG compression is applied right before transmission to avoid lengthy communication times. In this article, we present a data/information-oriented architecture that reduces the amount of data from the very beginning.
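
The speed gap quoted above can be checked with a few lines of arithmetic. The sketch below uses only the figures stated in the text (24 bits, 125k sps, 197 Gb/s); the constant and function names are chosen here for illustration.

```python
# Figures quoted in the text; the names are illustrative.
ADC_BITS = 24                 # resolution of the example A/D converter
ADC_SAMPLES_PER_S = 125_000   # 125k samples per second
SPU_BITS_PER_S = 197e9        # 197 Gb/s quoted for the Cell processor SPU


def adc_bit_rate(bits_per_sample: int, samples_per_s: int) -> int:
    """Raw output bit rate of an A/D converter in bits per second."""
    return bits_per_sample * samples_per_s


front_end_bps = adc_bit_rate(ADC_BITS, ADC_SAMPLES_PER_S)
speed_ratio = SPU_BITS_PER_S / front_end_bps

print(f"front end: {front_end_bps / 1e6:.1f} Mbps")
print(f"processor / front-end ratio: {speed_ratio:,.0f}x")
```

The ratio comes out between 10^4 and 10^5, which is consistent with the "several orders of magnitude" stated above.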

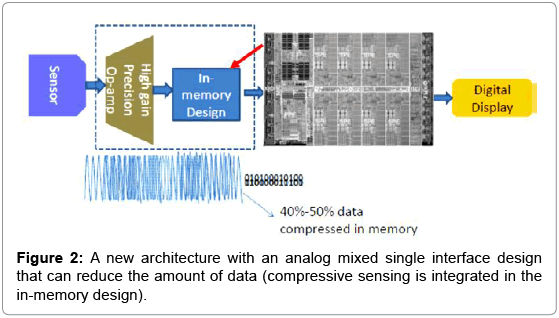

Figure 2 shows such an architecture. Note that the data are reduced at the analog front end, so the stream processor receives only a fraction of the original data. With less data, less energy is consumed in “data movement”, “driving long wires”, “accessing arrays” and “control overhead” in the stream processor. The key technology used here is compressive sensing.

To illustrate how compressive sensing can contribute to fast data acquisition, let us begin with a brief review of compressive sensing theory [1-11]. An example image can be represented as an $N \times M$ matrix $X$. By applying a random selection matrix $\Phi$ of size $m \times N$, we can generate a new $m \times M$ matrix $Y$:

$$Y = \Phi X$$

Because m << N, the total amount of data is reduced. Unlike JPEG and other nonlinear compression algorithms, compressive sensing reduces data linearly and preserves key features without much distortion. This is the key reason why compressive sensing can be applied at the front end of a data acquisition system instead of right before data transmission. One such example is the “single pixel camera” [13]. The camera performs the random selection Φ on the sampled object, so it generates less data and less data enters the downstream data acquisition system. Depending on the sparsity of the sampled data, the average data reduction from compressive sensing is about 50%. If energy is estimated in joules per bit, this suggests that compressive sensing may reduce the total energy and power consumed by the data processing architecture. While compressive sensing offers great potential for biomedical instrumentation, it requires careful implementation. For example, it is still debated whether the data produced by a single pixel camera are good randomly sampled data.
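
As a minimal sketch of the linear measurement step, the example below builds a random selection matrix and applies it to a small matrix. It assumes the simplest reading of "random selection", a 0/1 matrix whose rows each pick one distinct row of the input; dense Gaussian or Bernoulli matrices are also common in the compressive sensing literature. The sizes and helper names are illustrative and do not come from the article.

```python
import random


def random_selection_matrix(m, n, rng):
    """Build an m x n 0/1 matrix; each row selects one distinct input row."""
    chosen = sorted(rng.sample(range(n), m))
    return [[1 if col == idx else 0 for col in range(n)] for idx in chosen]


def matmul(a, b):
    """Plain-Python matrix product of a (p x q) and b (q x r)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


rng = random.Random(0)
N, M, m = 8, 4, 4  # assumed toy sizes; the text only requires m << N

X = [[rng.randint(0, 255) for _ in range(M)] for _ in range(N)]  # "image"
Phi = random_selection_matrix(m, N, rng)                         # m x N
Y = matmul(Phi, X)                                               # m x M

reduction = 1 - len(Y) / len(X)  # fraction of rows no longer carried forward
print(f"kept {len(Y)} of {len(X)} rows, reduction = {reduction:.0%}")
```

With m = N/2 the row count is halved, which mirrors the roughly 50% average reduction mentioned above. Under a joules-per-bit estimate, the energy spent moving the data downstream would scale by the same factor.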

Over the past several years, much effort has been devoted to analog-to-information conversion (AIC) [14-19], i.e., acquiring raw data at a low rate while still accurately reconstructing the compressed signals. The key components under investigation were analog-to-digital converters, random filtering, and demodulation. Hasler et al. [20-24] were the first to apply compressive sensing to pixel array data acquisition systems. In the traditional data flow, the A/D converter is placed right after the pixel array. That is, the pixel data are digitized directly at the Nyquist sample rate. When a compressive sensing algorithm is applied, the A/D converter is placed after the random selection/demodulation stage and its sample rate is significantly lower. Although compressive sensing algorithms help reduce the sample rate of the A/D converter, this comes at a price. An analog front end is needed to produce the randomized measurements, which in turn leads to large analog computing units at the front end. These components are cumbersome and slow. For example, an analog multiplier works at 10 MHz with over 200 ns setup time. While most elements in the front end use the 0.25 μm technology node, some rely on the 0.5 μm technology node (e.g., floating-gate technology to store random selection coefficients).
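
To make the "random selection/demodulation before the A/D converter" data flow concrete, here is a small simulation of a random demodulator, one AIC architecture described in the literature. It is an illustrative assumption, not the circuit of Hasler et al. or of any cited work: the input is mixed with a random ±1 sequence and then integrated over blocks, so that only m values per n Nyquist-rate samples would need to be digitized.

```python
import math
import random


def random_demodulate(x, m, rng):
    """Mix x with a random +/-1 sequence, then integrate-and-dump to m values.

    Returns the m measurements and the mixing sequence that was used.
    """
    n = len(x)
    if n % m != 0:
        raise ValueError("len(x) must be a multiple of m")
    block = n // m
    chips = [rng.choice((-1.0, 1.0)) for _ in range(n)]
    mixed = [c * v for c, v in zip(chips, x)]
    measurements = [sum(mixed[i * block:(i + 1) * block]) for i in range(m)]
    return measurements, chips


rng = random.Random(1)
n, m = 64, 16  # assumed toy sizes: 64 Nyquist-rate samples, 16 measurements
x = [math.sin(2 * math.pi * 5 * t / n) for t in range(n)]

y, chips = random_demodulate(x, m, rng)
adc_rate_fraction = m / n  # A/D conversions needed relative to Nyquist sampling
print(f"{len(y)} conversions instead of {n} ({adc_rate_fraction:.0%})")
```

In hardware, the multiply and integrate steps are the analog computing units whose size and speed are the price discussed above; the simulation only shows why the A/D converter after them can run at a fraction of the Nyquist rate.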

By using compressive sensing to reduce the sample rate of the A/D converter, it appears that we are moving away from the current technology trend (i.e., smaller feature size transistors to achieve higher speed and lower power). Instead, we rely heavily on analog designs and computations, which are difficult to scale. Little is known about how to build circuits that create “good” measurement matrices. Here, “good” refers not only to effective selection matrices but also to circuit implementation costs such as power and area. In addition, the high complexity of reconstruction algorithms demands high-performance computing capabilities. Our recent implementation [25] showed that it is possible to use a level-crossing sampling approach to replace Nyquist sampling. With a new in-memory design, the compressive sensing based biomedical instrumentation performs digitization only when there is enough variation in the input and when the random selection matrix chooses this input. This implementation can also be applied to a much wider range of applications, including real-time applications such as telemedicine and remote monitoring. Additional work, such as that of Yoo et al. [26], also indicates that it is possible to integrate compressive sensing into the A/D converter.
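
The two digitization conditions described above (enough variation in the input, and selection by the random matrix) can be illustrated in software. The sketch below is a hypothetical model, not the implementation of [25]: the threshold `delta`, the selection probability `keep_prob`, and the choice to update the reference level on every crossing are all assumptions made for illustration.

```python
import math
import random


def level_crossing_cs(samples, delta, keep_prob, rng):
    """Return (index, value) pairs that pass both gates.

    Gate 1 (level crossing): the input moved at least `delta` away from the
    last value that crossed a level.
    Gate 2 (random selection): the sample is chosen with probability
    `keep_prob`, standing in for one entry of a random selection matrix.
    """
    kept = []
    last = None  # last value that triggered a level crossing
    for i, v in enumerate(samples):
        if last is not None and abs(v - last) < delta:
            continue  # not enough variation: no digitization
        last = v
        if rng.random() < keep_prob:
            kept.append((i, v))  # both gates passed: digitize this sample
    return kept


rng = random.Random(2)
signal = [math.sin(2 * math.pi * t / 50) for t in range(200)]
kept = level_crossing_cs(signal, delta=0.2, keep_prob=0.5, rng=rng)
print(f"digitized {len(kept)} of {len(signal)} samples")
```

A slowly varying input triggers few level crossings, so the number of conversions tracks signal activity rather than a fixed Nyquist clock, and the random gate thins it further.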

References

1. Donoho DL (2006) Compressed sensing. IEEE Trans Inform Theory 52:1289-1306.

2. Candès E, Romberg J, Tao T (2006) Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inform Theory 52:489-509.

3. Candès EJ, Romberg JK, Tao T (2006) Stable signal recovery from incomplete and inaccurate measurements. Comm Pure Appl Math 59:1207-1223.

4. Candès E, Romberg J (2006) Encoding the ℓp ball from limited measurements. Data Compression Conference, California.

5. Boufounos P, Baraniuk R (2007) Quantization of sparse representations. Rice University, Houston, TX, Department of Electrical and Computer Engineering.

6. Boufounos P, Baraniuk RG (2007) Sigma Delta Quantization for Compressive Sensing. Wavelets XII, SPIE International Symposium on Optical Science and Technology, California.

7. Boufounos P, Baraniuk RG (2008) 1-bit compressive sensing. Info. Sciences and Systems (CISS), Princeton, New Jersey.

8. Goyal V, Fletcher A, Rangan S (2008) Compressive sampling and lossy compression. IEEE Signal Processing Magazine 25:48-56.

9. Jacques L, Hammond D, Fadili JM (2011) Dequantizing compressed sensing: When oversampling and non-Gaussian constraints combine. IEEE Trans Inform Theory 57.

10. Dai W, Milenkovic O (2009) Subspace pursuit for compressive sensing signal reconstruction. IEEE Trans Inform Theory 55:2230-2249.

11. Needell D, Tropp JA (2009) CoSaMP: Iterative signal recovery from incomplete and inaccurate samples. Applied and Computational Harmonic Analysis 26:301-321.

12. http://www.ti.com/lit/ds/symlink/ads1258.pdf

13. http://dsp.rice.edu/cscamera

14. Lin M (2010) Analogue to Information System based on PLL-based Frequency Synthesizers with Fast Locking Schemes. The University of Edinburgh.

15. Prelcic NG (2010) State of the art of analog to information converters. Keynote presentation, Institute of Electronics and Informatics Engineering of Aveiro.

16. Mishali M, Hilgendorf R, Shoshan E, Rivikin I, Eldar YC (2011) Generic Sensing Hardware and Real-Time Reconstruction for Structured Analog Signals. ISCAS.

17. Mishali M, Eldar YC, Elron AJ (2011) Xampling: Signal acquisition and processing in union of subspaces. IEEE Trans Signal Process 59:4719-4734.

18. Eldar YC (2009) Compressed sensing of analog signals in shift-invariant spaces. IEEE Trans Signal Process 57:2986-2997.

19. Vetterli M, Marziliano P, Blu T (2002) Sampling signals with finite rate of innovation. IEEE Trans Signal Process 50:1417-1428.

20. Bandyopadhyay A, Lee J, Robucci RW, Hasler P (2006) MATIA: A Programmable 80 µW/frame CMOS Block Matrix Transform Imager Architecture. IEEE Journal of Solid-State Circuits 41:663-672.

21. Bandyopadhyay A, Hasler P (2003) A fully programmable CMOS block matrix transform imager architecture. IEEE Custom Integrated Circuits Conf. (CICC), San Jose, California.

22. Bandyopadhyay A, Hasler P, Anderson D (2005) A CMOS floating-gate matrix transform imager. IEEE Sensors 5:455-462.

23. Hasler P, Bandyopadhyay A, Anderson DV (2003) High fill-factor imagers for neuromorphic processing enabled by floating gates. EURASIP J Appl Signal Process 7:676-689.

24. Srinivasan V, Serrano GJ, Gray J, Hasler P (2005) A precision CMOS amplifier using floating-gates for offset cancellation. IEEE Custom Integrated Circuits Conf. (CICC).

25. Powers LS, Roveda JM (2015) Compressive Sensing Systems and Related Methods. UA14-046, Patent application, UNIA 15.09 PCT.

26. Yoo J, Becker S, Monge M, Loh M, Candès E (2012) Design and implementation of a fully integrated compressed-sensing signal acquisition system. 2012 International Conference on Acoustics, Speech and Signal Processing, Brisbane.
