Brain Tumour Segmentation and Diagnosis using Multiscale CNNs

Received: 01-May-2023 / Manuscript No. jcd-23-92089 / Editor assigned: 04-May-2023 / PreQC No. jcd-23-92089 / Reviewed: 18-May-2023 / QC No. jcd-23-92089 / Revised: 22-May-2023 / Manuscript No. jcd-23-92089 / Accepted Date: 29-May-2023 / DOI: 10.4172/2476-2253.1000174

Abstract

Early identification and diagnosis of brain tumours is essential in clinical practice, and it requires accurate, efficient, and robust segmentation of the tumour region. In this article, we propose a method for automatically segmenting brain tumours using convolutional neural networks (CNNs). Conventional CNNs focus on local features and disregard global region features, although both are important for pixel classification and recognition. In addition, a brain tumour may develop in any area of the brain and take on any size or shape. We designed a three-stream framework, called multiscale CNNs, that automatically detects the top three scales of image size and combines information from regions of different scales around each pixel. Datasets from the Multimodal Brain Tumor Image Segmentation Benchmark (BRATS), organized by MICCAI 2013, are used for both training and testing. The multimodal features of T1, T1-enhanced, T2, and FLAIR MRI images are also combined within the multiscale CNNs architecture. Compared with conventional CNNs and the top two methods in BRATS 2012 and 2013, our framework improves the accuracy and robustness of brain tumour segmentation.

Keywords

Brain tumor segmentation; Convolutional neural network; Self-attention; Cross-channel attention

Introduction

A brain tumour is a solid mass formed by the uncontrolled growth of abnormal cells in the brain. Brain tumours fall into two categories, malignant and benign, and malignant tumours are further divided into primary and metastatic tumours. Gliomas, which originate from glial cells and infiltrate the surrounding tissues, are the most prevalent type of brain tumour in adults. Patients with low-grade gliomas can expect a life extension of several years, whereas those with high-grade gliomas can expect a life extension of only two years. An estimated 23,000 new cases of brain cancer were expected in the United States alone in 2015, and the number of patients receiving a diagnosis is rising quickly each year [1].

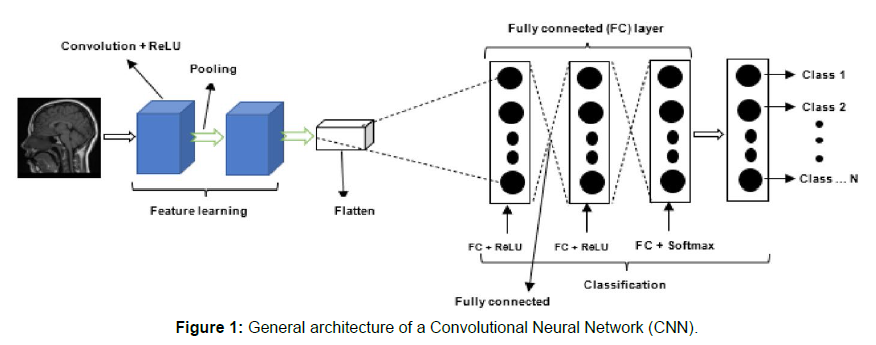

The most common therapies for brain tumours are surgery, radiation therapy, and chemotherapy. Surgery seeks to resect the tumour, whereas radiation therapy and chemotherapy aim to halt its growth. Early identification of brain tumours is therefore crucial, and precise segmentation and localisation of the tumour are essential for therapy planning. Among the available imaging modalities, Magnetic Resonance Imaging (MRI) provides the most comprehensive view of the brain and is the test used most frequently to identify brain tumours [2]. MRI sequences include T1-weighted MRI (T1w), T1-weighted MRI with contrast enhancement (T1wc), T2-weighted MRI (T2w), Proton density-weighted MRI (PDw), and Fluid-Attenuated Inversion Recovery (FLAIR), among others. Unlike Computed Tomography (CT) images, MRI images acquired on different types of machines have different grey-scale values (Figure 1). Gliomas can develop in any part of the brain and vary in size and shape. They are also infiltrative, which makes them difficult to distinguish from healthy tissue. To overcome these difficulties, information from several MRI modalities should be combined [3].

Despite decades of research into effective techniques for brain tumour segmentation, no optimal algorithm has been found. Most existing techniques are based on traditional machine learning or on segmentation methods developed for other structures. They either rely on hand-designed specialised features or give poor segmentation results when the region around the tumour is diffuse and poorly contrasted. Methods based on hand-designed features must compute a large number of features and mostly exploit generic edge-related information, without adapting to the domain of brain tumours. Moreover, typical features derived from image gradients, Gabor filters, the Histogram of Oriented Gradients (HoG), or wavelets perform poorly, especially when the borders between tumours and healthy tissue are fuzzy. Creating robust, task-adapted feature representations is therefore crucial for segmenting challenging brain tumours [4, 5].

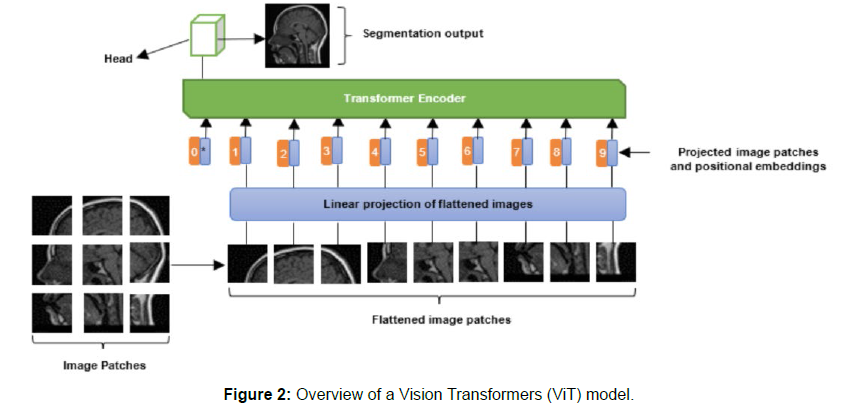

Convolutional neural networks (CNNs), a supervised learning technique, have recently proved effective at automatically learning hierarchies of complex features from in-domain data, and they have achieved promising results in mitosis detection, face recognition, recognition on the MNIST database, and other areas. CNNs have also been applied successfully to segmentation problems, although they are not yet widely used for brain tumour segmentation. Conventional CNNs, however, attend only to local textural features, so some crucial global features are inevitably lost [6]. Because both local and global features matter in image recognition tasks, we propose a novel CNN architecture for brain tumour segmentation that combines both. In this architecture, the multiscale concept is introduced into our previously constructed conventional single-scale CNNs. Fusing the multiscale CNNs captures both local and global features, and the class of each pixel is predicted by combining the information learned by each CNN. In addition, multimodal MRI images are trained on simultaneously to exploit their complementary information. Experiments with multiscale CNNs have produced promising tumour segmentation results [7] (Figure 2).
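The multiscale, multimodal input described above can be illustrated with a minimal sketch. It extracts square patches of several sizes centred on the same pixel, one patch per MRI modality, so that each stream of a multiscale network would see a different amount of context. The patch sizes (3, 5, 7), the zero padding at the borders, the toy 6x6 slices, and the function names are assumptions made for illustration only; they are not the scales or the implementation used in the article.

```python
def extract_patch(image, row, col, size):
    """Return a size x size patch centred on (row, col), zero-padded at the borders."""
    half = size // 2
    height, width = len(image), len(image[0])
    patch = []
    for r in range(row - half, row - half + size):
        line = []
        for c in range(col - half, col - half + size):
            inside = 0 <= r < height and 0 <= c < width
            line.append(image[r][c] if inside else 0.0)
        patch.append(line)
    return patch


def multiscale_patches(modalities, row, col, scales=(3, 5, 7)):
    """For each scale, collect one patch per modality (channels first)."""
    return {
        size: [extract_patch(img, row, col, size) for img in modalities.values()]
        for size in scales
    }


def toy_slice(offset):
    """Hypothetical 6x6 slice; real MRI slices are far larger."""
    return [[float(offset + r * 6 + c) for c in range(6)] for r in range(6)]


modalities = {
    "T1": toy_slice(0),
    "T1c": toy_slice(100),
    "T2": toy_slice(200),
    "FLAIR": toy_slice(300),
}
patches = multiscale_patches(modalities, row=2, col=3)
for size, stack in patches.items():
    # channels, patch height, patch width for this scale
    print(size, len(stack), len(stack[0]), len(stack[0][0]))
```

Each entry of `patches` holds four channels (one per modality) at one scale. In a three-stream design, each scale would be fed to its own CNN and the resulting features fused before the pixel is classified.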

Brain tumours, a broad term for tumours of the nervous system that develop inside the skull, are second only to tumours of the lung, stomach, uterus, and breast in prevalence. They account for around 5% of systemic cancers, 70% of juvenile tumours, and more than 2.4% of fatalities. Magnetic resonance imaging (MRI) is one of the most widely used diagnostic methods in clinical practice and plays a crucial role in identifying brain tumours. It is noninvasive, offers strong soft-tissue contrast, and reliably identifies the location, size, and shape of brain tumours without exposing patients to ionising radiation. Accurate segmentation of brain tumours is crucial for diagnosis, pathological analysis, and subsequent surgical planning [8].

Although accurate segmentation of brain tumours is necessary for clinical research, it is often difficult because of image artefacts, noise, low contrast, and large case-to-case differences in tumour shape, size, and location. Segmentation is harder still because the internal structures of brain tumours resemble one another and the boundaries between their sub-regions are fuzzy. Manual segmentation takes considerable effort and depends on the doctor’s skill and knowledge. Research into techniques that segment brain tumours automatically, precisely, and effectively is therefore extremely important for both clinical diagnosis and surgery [9].

Deep learning has recently achieved a string of successes in areas including image, audio, and natural language processing. Convolutional neural networks (CNNs) are a key component of computer vision applications, and semantic image segmentation has advanced significantly in recent years. During training, CNNs learn visual and semantic features directly from images, which simplifies the network model and makes it possible to train deep networks. As a result, deep CNNs have found several uses in medical image processing [10]. Depending on their input and output, deep learning-based image segmentation approaches can be classified into block segmentation and end-to-end segmentation, the latter being implemented mainly through an encoder-decoder structure. To segment the tumour region, the entire image or an image block is fed to the network, and the output is decoded into a class probability for each pixel [11].

End-to-end segmentation models are mostly based on the U-Net network, which uses a symmetric topology with skip connections to preserve image information and has become the standard framework for most image segmentation tasks. Although improved U-Net variants enhance segmentation performance, network depth and generalisation still need improvement. The idea of identity mapping was introduced to balance network depth and performance. However, using residual blocks to change the channel count significantly increases the number of channels, which leads to data redundancy [12].
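The identity mapping mentioned above can be sketched in a few lines. The block below computes `relu(x + F(x))`, where `F` is a small two-layer transform; the fully connected layers, the weights, and the function names are hypothetical stand-ins for the convolutional layers of a real residual block, chosen only to keep the example self-contained.

```python
def relu(vector):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in vector]


def linear(vector, weights):
    """Multiply a weight matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(x + F(x)) with F(x) = W2 * relu(W1 * x)."""
    fx = linear(relu(linear(x, w1)), w2)
    return relu([a + b for a, b in zip(x, fx)])


x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
small = [[0.1, 0.0], [0.0, 0.1]]

# With zero weights F(x) is zero, so the block passes its input through.
print(residual_block(x, zeros, zeros))
# With small weights the block adds a small correction to the input.
print(residual_block(x, small, small))
```

Because the identity path carries the input forward unchanged, a layer that has learned nothing useful does not degrade the signal, which is one common explanation for why such blocks make deeper networks easier to train.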

Discussion

Among the glioma segmentation approaches proposed to date, those based on conventional image processing algorithms rely primarily on manual intervention, and a priori constraints are needed to guarantee the segmentation. Machine learning-based glioma segmentation approaches rely on manually selected image features, so their segmentation performance depends on hand-crafted features and their capacity to generalise is poor [13].

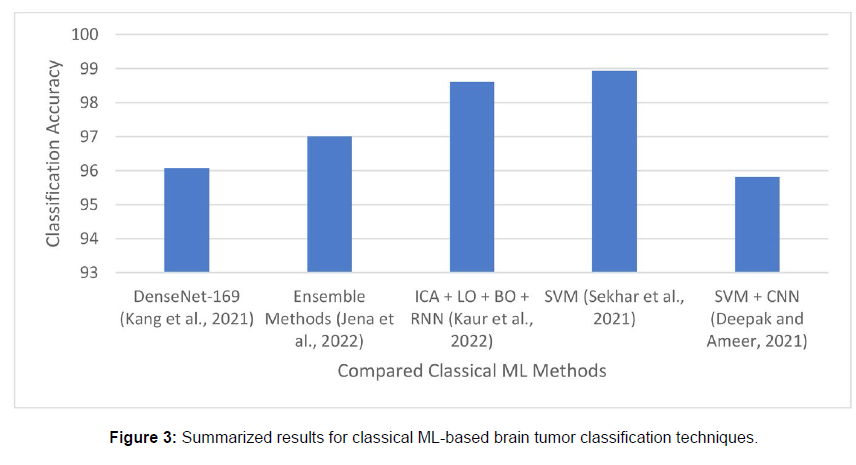

Deep learning-based glioma segmentation methods use a neural network model to extract image features automatically and delineate the glioma region. They therefore remove the drawbacks of the methods above, namely strong prior constraints and manual intervention. In large-scale, complex glioma segmentation scenarios, the automation and robustness of segmentation have improved, and good segmentation results are now achievable. Figure 3 shows the flow of a deep learning-based glioma segmentation algorithm [14]. The procedure can be broken down into the following steps. First, MRI scans of the patient’s brain are acquired as the algorithm’s input data. Next, the input data are divided into a training set, a validation set, and a test set. Because the original brain MRI suffers from noise and uneven intensity, the divided data must also be pre-processed.
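The data division step can be sketched as follows. The split fractions (70/15/15), the fixed random seed, the patient identifiers, and the choice to split by patient rather than by slice are all assumptions for illustration; the article does not state how its data were divided.

```python
import random


def split_patients(patient_ids, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle patient IDs reproducibly and split them into three disjoint sets."""
    ids = sorted(patient_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test


# Hypothetical patient identifiers.
patients = [f"patient_{i:03d}" for i in range(20)]
train_ids, val_ids, test_ids = split_patients(patients)
print(len(train_ids), len(val_ids), len(test_ids))
```

Splitting at the patient level keeps all slices from one patient in the same set, which avoids evaluating the model on images that are nearly identical to ones it was trained on.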

Commonly used pre-processing methods for glioma images include bias field (offset) correction, intensity standardisation, skull stripping, and image registration. The deep learning model is then trained on the pre-processed data. During training, the model automatically extracts features and passes them through the designed model structure by forward propagation. The multi-region glioma mask serves as the label for computing the loss value, and the model parameters are adjusted by backpropagation over many iterations to optimise model performance. At the end of training, the model is assessed with a variety of evaluation metrics, and the models that meet the required thresholds are saved. Finally, the best-performing model is used to segment the test set and produce the final glioma segmentation results [15-20].
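Two of the steps above lend themselves to a short sketch: intensity standardisation and evaluation. The first function applies a z-score to the voxels inside a brain mask, which is one common way to standardise MRI intensities. The second computes the Dice coefficient, an overlap metric widely used in brain tumour segmentation. Both choices are assumptions, since the article names neither a specific standardisation method nor specific metrics, and the flat lists stand in for real image volumes.

```python
import statistics


def standardise_intensity(voxels, brain_mask):
    """Z-score the voxels inside the brain mask; background voxels are set to 0."""
    brain = [v for v, m in zip(voxels, brain_mask) if m]
    mean = statistics.mean(brain)
    std = statistics.pstdev(brain)
    if std == 0:
        std = 1.0  # avoid division by zero on a constant image
    return [(v - mean) / std if m else 0.0 for v, m in zip(voxels, brain_mask)]


def dice_score(pred, target):
    """Dice = 2 * |P and T| / (|P| + |T|) for two binary masks."""
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy flattened image: the first voxel is background, the rest are brain.
voxels = [0.0, 10.0, 20.0, 30.0]
brain_mask = [0, 1, 1, 1]
standardised = standardise_intensity(voxels, brain_mask)
print(standardised)

# Toy predicted and reference tumour masks.
predicted = [1, 1, 0, 0]
reference = [1, 0, 0, 0]
print(dice_score(predicted, reference))
```

A Dice score of 1.0 means the predicted and reference masks coincide exactly, and 0.0 means they do not overlap at all.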

Conclusion

Most current brain tumour segmentation techniques are based on the FCN and U-Net networks. However, the FCN network is not fine-grained, and it disregards the relationships between pixels. Experimental evidence suggests that the U-Net model is marginally superior to the FCN model, although the generalisation of its predictions still needs to be strengthened. To address these issues, this paper proposes a DRT-Unet network for the precise segmentation of brain tumours. It takes images from four MRI modalities as input and uses a dilated convolution block to enlarge the receptive field during encoding, which provides the network with richer and more detailed feature information.
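How a dilated convolution enlarges the receptive field can be shown with a minimal one-dimensional sketch. The kernel taps are spaced `dilation` samples apart, so a kernel of size k covers (k - 1) * dilation + 1 input samples without adding any weights. The signal, kernel, and function names are illustrative assumptions; the DRT-Unet block itself operates on 2D feature maps, and its exact configuration is not given in the text.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Valid 1D cross-correlation whose kernel taps are `dilation` samples apart."""
    span = (len(kernel) - 1) * dilation + 1  # input samples covered by one output
    return [
        sum(w * signal[i + j * dilation] for j, w in enumerate(kernel))
        for i in range(len(signal) - span + 1)
    ]


def receptive_field(kernel_size, dilation):
    """Number of input samples spanned by a single dilated kernel."""
    return (kernel_size - 1) * dilation + 1


signal = [1, 2, 3, 4, 5, 6, 7, 8, 9]
kernel = [1, 1, 1]

# dilation=1: each output sums 3 adjacent samples.
print(dilated_conv1d(signal, kernel, dilation=1))
# dilation=2: each output sums 3 samples spread over a 5-sample span.
print(dilated_conv1d(signal, kernel, dilation=2))
print(receptive_field(3, 1), receptive_field(3, 2))
```

With the same three weights, the dilated kernel sees a span of five samples instead of three, which is the sense in which dilation enlarges the receptive field at no extra parameter cost.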

To reduce information loss during deep network training, a dense block with local feature residual fusion propagates and preserves low-level visual features during encoding. For decoding, which enlarges the feature maps, the DRT-Unet network uses a dense block with local feature residual fusion together with a deconvolution structure that cascades up-pooling and transposed convolution. Up-pooling and transposed convolution allow the global and fine-grained details of the image to be largely recovered. The experiments in this study and the comparison with other approaches show that the DRT-Unet method can segment brain tumour lesions effectively. The proposed network also performs better than the other four segmentation approaches in both visual quality and objective metrics.
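The two upsampling operations named above can be sketched in one dimension. Up-pooling is approximated here by nearest-neighbour repetition, and transposed convolution is written as each input sample scattering a scaled copy of the kernel into the output. The nearest-neighbour variant, the kernels, the strides, and the way the two are cascaded are assumptions for illustration; the article does not specify these details, and real decoders work on 2D feature maps with learned weights.

```python
def unpool_nearest(signal, factor=2):
    """Up-pooling sketch: repeat every sample `factor` times."""
    return [v for v in signal for _ in range(factor)]


def transposed_conv1d(signal, kernel, stride=2):
    """Each input sample adds a scaled copy of the kernel to the output."""
    out = [0.0] * ((len(signal) - 1) * stride + len(kernel))
    for i, v in enumerate(signal):
        for j, w in enumerate(kernel):
            out[i * stride + j] += v * w
    return out


coarse = [1.0, 2.0, 3.0]

# Up-pooling doubles the length by repetition.
print(unpool_nearest(coarse))
# A stride-2 transposed convolution also enlarges the map, using kernel weights.
print(transposed_conv1d(coarse, [1.0, 0.5]))
# Cascade: up-pool first, then smooth with a stride-1 transposed convolution.
print(transposed_conv1d(unpool_nearest(coarse), [0.5, 0.5], stride=1))
```

Up-pooling restores size without any learned parameters, while the transposed convolution has weights that can be trained to refine the enlarged map, which is one plausible reason for combining the two.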

Conflicts of Interest

None

Acknowledgment

None

References

- Jedrychowski W, Galas A, Pac A (2005) Prenatal ambient air exposure to polycyclic aromatic hydrocarbons and the occurrence of respiratory symptoms over the first year of life. European Journal of Epidemiology 20: 775-782.

- Liang J, Cai W, Feng D, Teng H, Mao F, et al. (2018) Genetic landscape of papillary thyroid carcinoma in the Chinese population. J Pathol 244: 215-226.

- Liu RT, Chen YJ, Chou FF, Li CL, Wu WL, et al. (2005) No correlation between BRAFV600E mutation and clinicopathological features of papillary thyroid carcinomas in Taiwan. Clin Endocrinol (Oxf) 63: 461-466.

- Raghu G, Nyberg F, Morgan G (2004) The epidemiology of interstitial lung disease and its association with lung cancer. British Journal of Cancer 91: 3-10.

- Travis WD, Brambilla E, Nicholson AG, Yatabe Y, Austin JHM, et al. (2015) Classification of Lung Tumors: Impact of Genetic, Clinical and Radiologic Advances Since the 2004 Classification. J Thorac Oncol 10: 1243-1260.

- Siegel RL, Miller KD, Jemal A (2015) Cancer statistics, 2015. CA Cancer J Clin 65: 5-29.

- Austin JH, Yip R, D'Souza BM, Yankelevitz DF, Henschke CI, et al. (2012) International Early Lung Cancer Action Program Investigators. Small-cell carcinoma of the lung detected by CT screening: stage distribution and curability. Lung Cancer 76: 339-43.

- Hou JM, Krebs MG, Lancashire L, Sloane R, Backen A, et al. (2012) Clinical significance and molecular characteristics of circulating tumor cells and circulating tumor micro emboli in patients with small-cell lung cancer. J Clin Oncol 30: 525-32.

- Shamji FM, Beauchamp G, Sekhon HJS (2021) The Lymphatic Spread of Lung Cancer: An Investigation of the Anatomy of the Lymphatic Drainage of the Lungs and Preoperative Mediastinal Staging. Thorac Surg Clin 31: 429-440.

- Shamji FM, Beauchamp G, Sekhon HJS (2021) The Lymphatic Spread of Lung Cancer: An Investigation of the Anatomy of the Lymphatic Drainage of the Lungs and Preoperative Mediastinal Staging. Thorac Surg Clin 31: 429-440.

- Knox EG (2004) Childhood cancers and atmospheric carcinogens. Journal of Epidemiology and Community Health 59: 101-105.

- Yang H, Wang XK, Wang JB (2022) Combined risk factors and risk of upper gastrointestinal cancer mortality in the Linxian general population. International Journal of Cancer.

- Gao D, Lu P, Zhang N (2022) Progression of precancerous lesions of esophageal squamous cell carcinomas in a high-risk, rural Chinese population. Cancer Medicine.

- Bastos AU, Oler G, Nozima BH, Moyses RA, Cerutti JM, et al. (2015) BRAF V600E and decreased NIS and TPO expression are associated with aggressiveness of a subgroup of papillary thyroid microcarcinoma. Eur J Endocrinol 173: 525-540.

- Zoghlami A, Roussel F, Sabourin JC, Kuhn JM, Marie JP, et al. (2014) BRAF mutation in papillary thyroid carcinoma: predictive value for long-term prognosis and radioiodine sensitivity. Eur Ann Otorhinolaryngol Head Neck Dis 131: 7-13.

- Ito Y, Yoshida H, Maruo R, Morita S, Takano T, et al. (2009) BRAF mutation in papillary thyroid carcinoma in a Japanese population: its lack of correlation with high-risk clinicopathological features and disease-free survival of patients. Endocrine journal 56: 89-97.

- Stanojevic B, Dzodic R, Saenko V, Milovanovic Z, Pupic G, et al. (2011) Mutational and clinico-pathological analysis of papillary thyroid carcinoma in Serbia. Endocrine journal 58: 381-393.

- Sahpaz A, Onal B, Yesilyurt A, Han U, Delibasi T, et al. (2015) BRAF(V600E) Mutation, RET/PTC1 and PAX8-PPAR Gamma Rearrangements in Follicular Epithelium Derived Thyroid Lesions- Institutional Experience and Literature Review. Balkan Med J 32: 156-166.

- Siegel RL, Miller KD, Jemal A (2019) Cancer statistics, 2019. CA Cancer J Clin 69: 7-10.

- Chen X, Guo C, Cui W, Sun K, Wang Z, et al. (2020) CD56 Expression Is Associated with Biological Behavior of Pancreatic Neuroendocrine Neoplasms. Cancer Manag Res 12: 4625-4631.

Citation: Han W (2023) Brain Tumour Segmentation and Diagnosis using Multiscale CNNs. J Cancer Diagn 7: 174. DOI: 10.4172/2476-2253.1000174

Copyright: © 2023 Han W. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.
