Auditory Cortical Temporal Processing Abilities in Young Adults

Received: 26-Apr-2016 / Accepted Date: 08-Jun-2016 / Published Date: 15-Jun-2016 DOI: 10.4172/2161-119X.1000238

Abstract

Purpose: To evaluate whether cortical encoding of temporal processing ability, indexed by the N1 peak of the cortical auditory evoked potential (CAEP), could be measured in normally hearing young adults using three paradigms: voice-onset time (VOT), speech-in-noise and amplitude-modulated broadband noise. Research design: CAEPs were elicited using (1) naturally produced stop consonant-vowel (CV) syllables /da/-/ta/ and /ba/-/pa/; (2) speech-in-noise stimuli consisting of the speech sound /da/ at varying signal-to-noise ratios (SNRs); and (3) 16 Hz amplitude-modulated broadband noise (AM-BBN) presented in two conditions, (i) alone (representing a temporally modulated stimulus) and (ii) following an unmodulated BBN (representing a temporal change in the stimulus), using four modulation depths. (4) Behavioural tests of the temporal modulation transfer function (TMTF) and of speech perception using CNC word lists were also carried out. All stimuli were presented at 65 dB SPL in the sound field. Study sample: Participants were 20 adults (12 females and 8 males) aged 18–30 years with normal hearing. Results: (1) N1 latency differed significantly (p<0.05) between /da/ and /ta/ and between /ba/ and /pa/; (2) N1 latency was significantly prolonged as the SNR for the speech sound /da/ decreased; and (3) N1 latency did not change significantly across modulation depths, whether measured for the AM-BBN alone or following a BBN. Conclusion: Changes in N1 latency provide a measure of temporal changes in a stimulus for VOT and speech-in-noise. N1 latency could be used as an objective measure of temporal processing ability in individuals with temporal processing disorders who are difficult to assess behaviourally.

Introduction

Auditory temporal processing ability can be defined as the perception of sound, or of a change in sound, within a defined time [1]. It has been argued that auditory temporal processing is an essential component of most auditory processing capacities and operates at several levels, ranging from neuronal sensitivity to stimulus onset time to cortical processing of auditory information such as speech [1]. A number of studies have further shown that sustained disruption of auditory temporal processing in newborns and young children can impair the perception of speech, which can lead to poor phonological processing and, in turn, to poor reading and language development [2-4]. This type of causal relationship has been proposed in particular for auditory processing disorder, dyslexia and specific language impairment (SLI) [1,4,5]. It is therefore important to identify a temporal processing deficit early, to minimize its potential impact on speech, language and reading development. A major limitation in identifying such deficits in infants and young children is that they cannot provide reliable behavioural responses to measures of temporal processing. Cortical auditory evoked potentials (CAEPs), however, are emerging as a tool for evaluating temporal changes in sound stimuli [6,7], and are considered to reflect the perception of temporal differences.

Research has shown that in normal-hearing individuals the auditory cortex plays a predominant role in encoding temporal acoustic elements of the speech signal, such as voice-onset time (VOT) [8], speech in noise [9] and amplitude modulation [10], all of which are crucial to speech and language processing. When identifying words or sentences, listeners use temporal cues such as VOT to distinguish between stop consonants that differ in the timing of voicing onset. VOT is the delay between the release of the stop consonant and the start of voicing, and this interval provides the timing cue for VOT perception [11,12]. It is represented in the primary and secondary auditory cortex by synchronized activity time-locked to consonant release and to voicing onset [11]. Voiced syllable-initial stop consonants in English (such as /ba/ and /da/) have a short interval between consonant release and voicing onset compared with voiceless stop consonants (e.g., /pa/ and /ta/). Studies using synthesized speech tokens with VOTs of 0–80 ms, spanning the continuum between /da/ and /ta/, have shown that tokens with VOTs of 0–30 ms were identified as voiced /da/, whereas tokens with VOTs of 50–80 ms were consistently identified as voiceless /ta/ [13]. Because the 40 ms token was identified as either sound nearly half of the time, it appears that a normal auditory system must resolve timing differences as small as 20 ms to differentiate voiced from voiceless stop consonants.
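The 20 ms figure follows from the identification data: the last continuum step labelled consistently as voiced (30 ms) and the first labelled consistently as voiceless (50 ms) bracket a category boundary near 40 ms. A minimal Python sketch of that reasoning is given below. The identification proportions are hypothetical values chosen only to mirror the pattern described in [13], and the 50% boundary criterion and 90% "consistent" criterion are assumptions.

```python
# Hypothetical identification data for a /da/-/ta/ VOT continuum
# (illustrative values only, shaped to match the pattern described in [13]).
VOT_MS = [0, 10, 20, 30, 40, 50, 60, 70, 80]
P_VOICELESS = [0.0, 0.0, 0.0, 0.02, 0.5, 0.98, 1.0, 1.0, 1.0]


def category_boundary(vot_ms, p_voiceless, criterion=0.5):
    """VOT at which the proportion of 'voiceless' responses first reaches
    the criterion, found by linear interpolation between adjacent steps."""
    for i in range(1, len(vot_ms)):
        p0, p1 = p_voiceless[i - 1], p_voiceless[i]
        if p0 < criterion <= p1:
            frac = (criterion - p0) / (p1 - p0)
            return vot_ms[i - 1] + frac * (vot_ms[i] - vot_ms[i - 1])
    raise ValueError("identification function never reaches the criterion")


def ambiguous_region(vot_ms, p_voiceless, consistent=0.9):
    """Last step labelled 'voiced' and first step labelled 'voiceless'
    on at least `consistent` of trials (hypothetical criterion)."""
    last_voiced = max(v for v, p in zip(vot_ms, p_voiceless) if p <= 1 - consistent)
    first_voiceless = min(v for v, p in zip(vot_ms, p_voiceless) if p >= consistent)
    return last_voiced, first_voiceless


boundary = category_boundary(VOT_MS, P_VOICELESS)
lo, hi = ambiguous_region(VOT_MS, P_VOICELESS)
print(f"boundary ~ {boundary:.0f} ms; consistent labels at {lo} and {hi} ms "
      f"(resolution needed ~ {hi - lo} ms)")
```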

The ability of the auditory system to detect and resolve a speech signal embedded in competing background noise is critical for successful communication in difficult listening environments. Numerous factors contribute to the ability to hear a signal in competing noise, including reduced audibility and the way in which signals in noise are encoded by the peripheral and central auditory system. The auditory cortex in particular has been implicated in discriminating stimuli in background noise: cortical neurons respond primarily to stimulus onset and do not fire to continuous, static background noise [14,15], which suggests that they must resolve timing differences of milliseconds. Whereas auditory nerve fibres discharge continuously to the masker, so that the signal response is a modulation of the firing rate produced by the masker, cortical neurons show no steady discharge to the masker, so their full dynamic range is available to encode signal onset [14,15]. Cortical neurons are therefore more effective at encoding the signal-to-noise ratio than changes in signal intensity per se [16].

Amplitude modulations within speech (as represented by the speech envelope) are important temporal cues for identifying linguistic contrasts [17] and convey sufficient information for speech to be intelligible. Temporal smearing of these modulations reduces intelligibility and therefore impairs speech perception [18]. Psychoacoustic studies have demonstrated that slow modulation frequencies of 4−16 Hz are both necessary and almost sufficient for correct speech identification in quiet and in noise [18,19]. For example, Drullman et al. [19] investigated the effect of temporal smearing on sentence intelligibility and phoneme recognition. They demonstrated that perceiving only modulations above 16 Hz yields almost the same speech perception threshold for sentences in noise; however, for modulations lower than 16 Hz, sentence intelligibility in quiet is heavily affected. Stop consonants are affected more than vowels because of their short duration [19]. Perceiving these modulation frequencies within speech therefore appears to require a high level of resolution in both frequency and time.

Psychoacoustic tests of temporal processing ability, such as voice-onset time, speech-in-noise and amplitude modulation detection as a function of modulation frequency, have been used to evaluate populations with temporal processing deficits such as auditory neuropathy spectrum disorder (ANSD) [7,20-26] and dyslexia [27-30]. These studies reveal abnormal temporal processing in a high proportion of individuals with ANSD and dyslexia, which is assumed to underpin the poor speech perception, language and reading skills measured in these populations. Given the difficulty of testing younger populations behaviourally, objective measures of temporal processing using the CAEP would be an advantage in ensuring early detection and remediation.

The CAEP reflects synchronized electroencephalographic (EEG) activity in response to sound stimuli and has been found to have high temporal resolution [31,32]. CAEP waveform morphology can be described in terms of amplitude and latency. These voltage changes over time are presumed to result from post-synaptic potentials within the brain, and are influenced by the number of recruited neurons, the synchrony of the neural response and the extent of neuronal activation [33]. The CAEP waveform comprises several components, including the P1-N1-P2 complex, which reflects synchronous neural activity of structures in the thalamo-cortical portion of the auditory system in response to stimulus onset and to other acoustic changes within an ongoing stimulus [34,35]. That is, the P1-N1-P2 complex can reflect a change from silence to sound (onset response), from sound to silence (offset response), or amplitude or frequency modulation of a sustained tone [36]. It may also occur in response to changes within ongoing, more complex sounds such as speech [37-39]. Martin and Boothroyd [38] coined the term “Acoustic Change Complex” (ACC) to describe the cortical response evoked by a change in a stimulus, including changes in spectral envelope alone or in periodicity alone with no change in amplitude, or in amplitude alone with no change in spectral envelope or periodicity [40].

Human electrophysiological studies using CAEPs have demonstrated that N1 latency is sensitive to temporal rather than intensity cues of acoustic stimuli [7,41,42]. Onishi and Davis [41] showed that in young adults with normal hearing, N1 latency was shortest for stimuli with fast rise times and lengthened as rise time was extended; when rise time was held constant, changing stimulus intensity had little influence on N1 latency. Two other studies support this finding: Michalewski et al. [7] showed that N1 latency in normally hearing young adults was constant across intensity levels of 50–100 dB SPL, and Rapin et al. [42] showed that N1 latency to pure tones was stable across intensity levels of 30–70 dB SPL.

Recordings from single neurons in the primary auditory cortex of anaesthetised cats have shown that the SNR, rather than the absolute level of the sound, is the essential factor affecting the latency of the evoked responses [14]. Specifically, Phillips and Farmer [14] showed that when the noise level was increased, the tone level also had to be increased by the same amount to maintain an equivalent response from a given cortical neuron; these responses are typically transient and time-locked to signal onset. This finding indicates that the latency of the evoked responses reflects the timing cues of an acoustic signal in noise more than the intensity cues of that signal. Previous studies have demonstrated the sensitivity of CAEP N1 latency to temporally different stimuli in normally hearing and in temporally disordered populations. Kaplan-Neeman et al. [43] and Whiting et al. [36] measured changes in N1 latency in speech-in-noise paradigms using different SNRs in young adults with normal hearing, and found that latency increased with increasing noise level. Whiting et al. [36], for instance, increased a broadband noise from 50 to 80 dB SPL in 10 dB steps while recording cortical evoked responses to speech tokens (/da/ and /ba/) presented at 65 and 80 dB SPL. As the noise level increased, N1 latencies increased systematically; expressed as SNR (20 dB to -5 dB), N1 latencies increased as SNR decreased. In another study, Kaplan-Neeman et al. [43] recorded CAEPs in a /da/-/ga/ discrimination paradigm at SNRs ranging from 15 dB to -6 dB, and observed a systematic increase in N1 latency as the SNR decreased for both stimuli. Both studies used an oddball paradigm to evoke the discriminatory P3 cortical response when individuals perceive a target stimulus.

In a temporally disordered population, individuals with ANSD, Michalewski et al. [7] measured the passively evoked N1-P1-N2 cortical response to 1 kHz tones and compared it with the response measured in adults with normal hearing. N1 latency was prolonged in ANSD, and this prolongation correlated significantly with psychoacoustic measures of gap detection and with speech perception. These findings emphasize the contribution of neural synchrony and of the degree of auditory nerve response to the latency of the N1 component of the CAEP, suggesting that N1 latency may provide a reliable objective test of auditory temporal processing.

Few studies have examined the effect of manipulating the temporal characteristics of a stimulus on the CAEP. Given the clinical applicability of this approach to children with disrupted temporal cues, and the importance of early detection and remediation, this study aimed to develop and evaluate cortical measures of temporal acuity by manipulating the temporal cues of sound stimuli, specifically voice-onset time, signal-to-noise ratio and amplitude modulation depth.

Methods

Participants

Twenty young adults with normal hearing (8 males, 12 females) aged 18−30 years (mean 25.2, SD 2.2) were recruited. To reduce the possibility of participants becoming fatigued by the long test time, they were divided into two groups (A and B) of 10 participants each: group A completed the voice-onset-time and speech-in-noise testing, and group B completed the amplitude-modulated broadband noise testing.

All participants had pure-tone air-conduction thresholds ≤ 15 dB HL at octave frequencies from 250 Hz to 8 kHz, normal tympanograms, no history of hearing or speech problems or of noise exposure, and no reported history of reading or learning problems. All had open-set speech perception scores ≥ 96% on CNC word lists and normal temporal processing as evaluated with a Temporal Modulation Transfer Function (TMTF) test (mean threshold -19 dB ± 1.48 dB).

Procedure

Air- and bone-conduction pure-tone thresholds were determined with a calibrated two-channel clinical audiometer (Interacoustics AC33) using a modified version of the Hughson-Westlake procedure [44]. Tympanometry was performed with a calibrated immittance meter (GSI-Tymp star V2, calibrated according to ANSI, 1987) using a 226 Hz probe tone. A Consonant-Nucleus-Consonant (CNC) word test was used to assess open-set speech perception ability.

Temporal modulation transfer function (TMTF)

For the TMTF test, two stimuli were generated: unmodulated broadband noise (BBN) and amplitude-modulated BBN (AM-BBN), each of 500 ms duration with 20 ms rise/fall ramps. The stimuli were generated using a 16-bit digital-to-analog converter at a sampling frequency of 44.1 kHz and low-pass filtered with a cutoff frequency of 20 kHz. The AM-BBN was derived by multiplying the BBN by a dc-shifted sine wave, and the modulation depth of the AM-BBN (fm = 16 Hz) was controlled by varying the amplitude of the modulating sine wave [27,45].
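The stimulus construction described above can be sketched in Python with NumPy as follows. This is an illustration under stated assumptions, not the software used in the study: the raised-cosine ramp shape, the Gaussian noise carrier, the RMS normalization and the function names are hypothetical, and the 20 kHz low-pass filter and D/A conversion are omitted.

```python
import numpy as np

FS = 44_100  # Hz, sampling frequency stated for the TMTF stimuli


def raised_cosine_ramps(x, ramp_ms=20.0, fs=FS):
    """Apply 20 ms onset/offset ramps (raised-cosine shape is an assumption)."""
    n = int(round(ramp_ms * fs / 1000))
    ramp = 0.5 * (1 - np.cos(np.pi * np.arange(n) / n))
    y = x.copy()
    y[:n] *= ramp
    y[-n:] *= ramp[::-1]
    return y


def am_bbn(m, duration_ms=500.0, fm_hz=16.0, fs=FS, rms=0.1, seed=None):
    """Broadband noise multiplied by a dc-shifted sine, 1 + m*sin(2*pi*fm*t).

    m = 0 gives unmodulated BBN; m = 1 is 100% modulation (0 dB in 20*log10 m).
    The token is rescaled to a fixed RMS (hypothetical choice) so overall level
    does not change with modulation depth.
    """
    rng = np.random.default_rng(seed)
    n = int(round(duration_ms * fs / 1000))
    t = np.arange(n) / fs
    y = rng.standard_normal(n) * (1 + m * np.sin(2 * np.pi * fm_hz * t))
    y *= rms / np.sqrt(np.mean(y ** 2))
    return raised_cosine_ramps(y, fs=fs)


def depth_db_to_m(depth_db):
    """Convert a modulation depth in dB (20*log10 m) back to m."""
    return 10 ** (depth_db / 20)


unmodulated = am_bbn(0.0, seed=1)
modulated = am_bbn(depth_db_to_m(-19.0), seed=1)  # about m = 0.11
```

Normalizing every token to the same RMS removes the small increase in power that multiplication by 1 + m sin(2πfm t) would otherwise introduce; whether the original stimuli were compensated in this way is not stated.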

The amplitude modulation detection threshold at a low modulation rate (fm = 16 Hz) was obtained using an adaptive two-interval, two-alternative forced-choice (2I-2AFC) procedure with a two-down, one-up rule, which estimates the modulation depth needed for 70.7% correct detection [46]. The participant's task was to identify the interval containing the modulation; no feedback was given. Step sizes and thresholds were expressed as modulation depth in decibels (20 log m, where m is the modulation depth). The step size was initially 4 dB and was reduced to 2 dB after two reversals. The mean of the last three reversals in a block of 14 was taken as the threshold. The poorest measurable threshold was 0 dB, corresponding to m = 1 (100% modulation depth); the more negative the value of 20 log m, the better the detection threshold. Stimuli were played from a Toshiba PC and presented in the sound field at 65 dB SPL, calibrated with a Brüel & Kjær Type 2250 sound level meter (microphone number 419).
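The adaptive rule above can be illustrated with a short simulation. The Python sketch below is hypothetical: it assumes a run ends after 14 reversals (one reading of "a block of 14"), starts at 0 dB (m = 1), and uses an idealized listener who always detects modulation at or above -19 dB and never below it, in place of a real 2I-2AFC observer, who would guess correctly on about half of the undetected trials.

```python
def two_down_one_up(respond, start_db=0.0, big_step=4.0, small_step=2.0,
                    reversals_before_small=2, n_reversals=14, n_average=3,
                    max_db=0.0, max_trials=1000):
    """Estimate the 70.7%-correct modulation detection threshold in dB (20*log10 m).

    respond(level_db) -> True if the listener chose the modulated interval.
    """
    level, step = start_db, big_step
    run, direction, reversals = 0, 0, []
    for _ in range(max_trials):
        if respond(level):
            run += 1
            if run < 2:
                continue            # two correct in a row are needed to step down
            run, move = 0, -1       # harder: smaller modulation depth
        else:
            run, move = 0, +1       # easier: larger modulation depth
        if direction and move != direction:
            reversals.append(level)
            if len(reversals) == reversals_before_small:
                step = small_step   # 4 dB steps become 2 dB after two reversals
            if len(reversals) == n_reversals:
                break
        direction = move
        level = min(level + move * step, max_db)  # cannot exceed m = 1 (0 dB)
    else:
        raise RuntimeError("staircase did not reach the required reversals")
    return sum(reversals[-n_average:]) / n_average


def ideal_listener(level_db):
    """Hypothetical observer with a sharp threshold at -19 dB."""
    return level_db >= -19.0


threshold_db = two_down_one_up(ideal_listener)
print(f"threshold = {threshold_db:.2f} dB (m = {10 ** (threshold_db / 20):.3f})")
```

With this deterministic listener the track settles into a 2 dB oscillation between -18 and -20 dB, and the mean of the last three reversals falls within one step of the listener's true threshold.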

Voice-onset-time (VOT)

The main aim of this test was to determine electrophysiologically the perceptual distinction between voiced and voiceless stop consonant-vowel (CV) syllables. Four naturally produced stop CV syllables, /da/-/ta/ and /ba/-/pa/, were recorded by an Australian female speaker. These syllables were selected to allow comparison between the current study and the numerous studies in which they have been used [13,39,47-51]. The speech stimuli in those studies, however, were synthesized, whereas those in the current study were naturally recorded, resulting in differences in formant frequencies and voicing time (see Table 1). In addition, naturally recorded speech has been recommended for evoked potential research, since the aim is to apply the results to speech perception in everyday life [52].

| Stimulus (stop CV) | VOT (ms) | F0 (Hz) | F1 (Hz) | F2 (Hz) |
|---|---|---|---|---|
| /da/ | 0,03 | 180.7 | 816.1 | 1465.8 |
| /ta/ | 0,95 | 182.0 | 801.3 | 1300.9 |
| /ba/ | 0,06 | 174.8 | 797.6 | 1199.8 |
| /pa/ | 0,67 | 189.0 | 785.1 | 1143.6 |

Table 1: Details of the stimuli: stimulus type, VOT, fundamental frequency and formant frequencies.

Speech stimuli were recorded using an AKG C535 condenser microphone connected to a Mackie sound mixer, with the microphone positioned 150 mm in front of and at 45 degrees to the speaker's mouth. The mixer output was routed via an M-Audio Delta 66 USB sound device to a Windows computer running Cool Edit audio recording software and captured as 44.1 kHz, 16-bit WAV files. All speech stimuli were recorded in a single session to maintain consistent voice quality.

After recording and selection, the speech stimuli were edited using Cool Edit 2000 software. All speech stimuli were 200 ms in duration and were ramped with 20 ms rise and fall times to prevent audible clicks arising from an abrupt onset or offset of the waveform.

The inter-stimulus interval (ISI), measured from the onset of one stimulus to the onset of the next, was 1207 ms, since a slower stimulation rate has been shown to produce more robust CAEP waveforms in immature auditory nervous systems [53].
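Because the ISI is defined from onset to onset, the silent interval between stimuli equals the ISI minus the stimulus duration. The short Python calculation below applies this to the durations and ISIs stated for each paradigm in the Methods; treating the 600 ms noise as the full duration of the speech-in-noise stimulus is an assumption.

```python
# (stimulus duration in ms, onset-to-onset ISI in ms) as stated in the Methods.
# Using the 600 ms noise as the speech-in-noise duration is an assumption.
PARADIGMS = {
    "VOT syllables": (200, 1207),
    "speech-in-noise": (600, 1667),
    "AM-BBN alone": (300, 1307),
    "BBN followed by AM-BBN": (900, 1907),
}


def silent_gap_ms(duration_ms, isi_ms):
    """Offset-to-onset silence implied by an onset-to-onset ISI."""
    return isi_ms - duration_ms


def presentation_rate_hz(isi_ms):
    """Stimuli per second for a given onset-to-onset ISI."""
    return 1000.0 / isi_ms


for name, (dur, isi) in PARADIGMS.items():
    print(f"{name:24s} gap = {silent_gap_ms(dur, isi):4d} ms, "
          f"rate = {presentation_rate_hz(isi):.2f} Hz")
```

On this reading, every paradigm leaves roughly 1 s of silence between stimuli (1007 ms, or 1067 ms for speech-in-noise), with presentation rates between about 0.52 and 0.83 Hz.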

Speech-in-noise

The main aim of the speech-in-noise test was to determine the effect of signal-to-noise ratio (SNR) on the CAEP, specifically on N1 latency, to further our understanding of how noise affects responses to the temporal cues of the speech signal. To measure this, the speech stimulus /da/ was presented at varying SNRs. The /da/ was naturally recorded by an Australian female speaker with the same equipment and settings as the VOT stimuli (AKG C535 condenser microphone connected to a Mackie sound mixer, microphone 150 mm in front of and at 45 degrees to the speaker's mouth, M-Audio Delta 66 USB sound device, Cool Edit software, 44.1 kHz, 16-bit WAV format). The /da/ stimulus was 60 ms in duration and was ramped with 20 ms rise and fall times to prevent audible clicks arising from an abrupt onset or offset of the waveform.

After the speech stimulus was selected and recorded, a 600 ms broadband noise was generated using Praat; its level was then adjusted in Matlab relative to the 65 dB SPL /da/ to set the signal-to-noise ratio, and the noise and /da/ were combined to create /da/ embedded in different noise levels. Noise levels were 45, 55, 60, 65, 70, 75 and 85 dB SPL, chosen to create seven signal-to-noise ratios (SNRs): Quiet (+20 dB), +10 dB, +5 dB, 0 dB, -5 dB, -10 dB and -20 dB. The ISI, measured from the onset of one stimulus to the onset of the next, was 1667 ms.
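Because the speech level is fixed at 65 dB SPL, each SNR is simply the speech level minus the noise level, which reproduces the seven conditions listed above. The Python sketch below shows that arithmetic and one way to scale a digital noise to a target SNR before mixing. The function names, the RMS-based level definition and the position of /da/ within the 600 ms noise (`onset_sample`) are assumptions; the Praat and Matlab steps used in the study are not described in enough detail to reproduce them.

```python
import numpy as np

SPEECH_DB_SPL = 65
NOISE_DB_SPL = [45, 55, 60, 65, 70, 75, 85]


def snr_db(speech_db_spl, noise_db_spl):
    """With both levels in dB SPL, the SNR is their difference."""
    return speech_db_spl - noise_db_spl


def rms(x):
    return np.sqrt(np.mean(np.square(x)))


def embed_at_snr(speech, noise, target_snr_db, onset_sample=0):
    """Scale `noise` so that 20*log10(rms(speech)/rms(noise)) equals the target,
    then add the speech token starting at `onset_sample` (hypothetical choice).
    RMS is taken over each waveform's own duration (an assumption)."""
    if onset_sample + len(speech) > len(noise):
        raise ValueError("speech token does not fit inside the noise")
    gain = rms(speech) / (rms(noise) * 10 ** (target_snr_db / 20))
    scaled_noise = gain * noise
    mixture = scaled_noise.copy()
    mixture[onset_sample:onset_sample + len(speech)] += speech
    return mixture, scaled_noise


print([snr_db(SPEECH_DB_SPL, n) for n in NOISE_DB_SPL])
# [20, 10, 5, 0, -5, -10, -20]
```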

Amplitude modulation (AM) of broadband noise

The main aim of this test was to measure changes in the N1 latency of the cortical response to an amplitude-modulated signal. Two stimuli were used: (i) a 300 ms amplitude-modulated broadband noise, to determine whether the cortical response was sensitive to temporal changes within a single stimulus, and (ii) a 600 ms unmodulated broadband noise followed by a 300 ms amplitude-modulated broadband noise, to determine whether the cortical response was sensitive to a temporally different stimulus. The modulation frequency was 16 Hz, and all stimuli had 20 ms rise and fall times. The stimuli were generated using a 16-bit digital-to-analog converter at a sampling frequency of 44.1 kHz and low-pass filtered with a cutoff frequency of 20 kHz. Modulation depth was controlled by varying the amplitude of the modulating sine wave. The onset-to-onset ISI was 1307 ms for the first condition and 1907 ms for the second.
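Condition (ii) can be sketched as a single continuous noise whose envelope switches from flat to 16 Hz modulation at 600 ms. In the hypothetical Python construction below, the modulation starts at sine phase zero, so there is no step in amplitude at the transition, and ramps are applied only at the overall onset and offset; neither detail is stated in the text. With m = 0 the second segment is acoustically identical to the first, which is consistent with the absence of an identifiable N1 at 0% depth in this condition reported in the Results.

```python
import numpy as np

FS = 44_100  # Hz


def bbn_then_am(m, pre_ms=600.0, am_ms=300.0, fm_hz=16.0, ramp_ms=20.0,
                fs=FS, seed=None):
    """Unmodulated BBN for `pre_ms`, then the same noise modulated at fm_hz.

    m is the modulation depth (0 to 1). Noise continuity, zero starting phase
    and raised-cosine ramps are assumptions of this sketch.
    """
    rng = np.random.default_rng(seed)
    n_pre = int(round(pre_ms * fs / 1000))
    n_am = int(round(am_ms * fs / 1000))
    noise = rng.standard_normal(n_pre + n_am)
    envelope = np.ones(n_pre + n_am)
    t_am = np.arange(n_am) / fs
    # Envelope starts at 1 (sin 0 = 0), so there is no level step at 600 ms.
    envelope[n_pre:] = 1 + m * np.sin(2 * np.pi * fm_hz * t_am)
    y = noise * envelope
    n_ramp = int(round(ramp_ms * fs / 1000))
    ramp = 0.5 * (1 - np.cos(np.pi * np.arange(n_ramp) / n_ramp))
    y[:n_ramp] *= ramp          # onset ramp of the whole 900 ms token
    y[-n_ramp:] *= ramp[::-1]   # offset ramp
    return y


depths = [1.0, 0.75, 0.5, 0.25, 0.0]  # 100% to 0% AM, as listed in the Results
tokens = {m: bbn_then_am(m, seed=0) for m in depths}
```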

Stimulus presentation

All stimuli were presented at 65 dB SPL (measured at the participant's head), which approximates normal conversational level; each participant confirmed that this level was loud but comfortable. Stimuli were presented via a loudspeaker placed 1 m from the participant's seat at 0° azimuth.

Set-up

Participants sat in a comfortable chair in a quiet room at the Electrophysiology Clinic and watched a DVD of their choice with the sound muted and subtitles on, so that they would remain engaged with the film and pay no attention to the stimuli. All participants were instructed to relax, to ignore the sounds being presented and not to fall asleep.

Data acquisition

A Neuroscan system with a 32-channel NuAmps amplifier was used for evoked potential recording. All sounds were presented using the Neuroscan STIM 2 stimulus presentation system.

Recording

Evoked potentials were recorded in continuous mode (gain 500, filter 0.1−100 Hz) and digitized at a sampling rate of 1000 Hz using Scan (version 4.3) software, via gold electrodes placed at C3, C4 and Cz, with the reference electrode at A2 (right mastoid) and the ground electrode on the contralateral (left) mastoid.

Each participant attended two 2-h recording sessions, which included electrode application and CAEP recording. None of the participants showed signs of fatigue during testing. All sound levels were calibrated using a Brüel & Kjær Type 2250 sound level meter (microphone number 419).

Off-line data analysis

Epochs with a -100 to 500 ms time window were extracted from the continuous file. Epochs in which the signal on any scalp electrode exceeded ±50 μV were rejected. Before averaging, epochs were baseline-corrected using the pre-stimulus period (-100 to 0 ms). The averaged waveforms were digitally band-pass filtered offline from 1 to 30 Hz (zero phase shift, 12 dB/octave) to enhance detection of the CAEP components and to smooth the waveforms for the final figures. For each participant, an individual average waveform was computed and its components were identified visually; the resulting measures were analysed statistically using SPSS (version 18). Because of the small group sizes, non-parametric tests were used.
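The off-line steps above can be sketched in Python with NumPy and SciPy as follows, for a single channel. This approximates the described processing under stated assumptions rather than reproducing the Neuroscan implementation: the zero-phase 12 dB/octave filter is approximated by a first-order Butterworth band-pass applied forward and backward with `scipy.signal.filtfilt`, and the automatic 70–250 ms N1 search window is a hypothetical choice (peaks in the study were identified visually).

```python
import numpy as np
from scipy.signal import butter, filtfilt

FS = 1000                     # Hz, EEG sampling rate
T_MS = np.arange(-100, 500)   # epoch time axis, one sample per ms


def epochs_from_continuous(eeg_uv, onset_samples, pre=100, post=500):
    """Cut -100 to +499 ms epochs (in samples at 1000 Hz) around stimulus onsets."""
    return np.stack([eeg_uv[o - pre:o + post] for o in onset_samples])


def average_caep(epochs_uv, reject_uv=50.0, baseline=100):
    """Reject, baseline-correct, average, then band-pass filter the average."""
    keep = np.all(np.abs(epochs_uv) <= reject_uv, axis=1)   # +-50 uV rejection
    if not keep.any():
        raise ValueError("all epochs rejected")
    kept = epochs_uv[keep]
    kept = kept - kept[:, :baseline].mean(axis=1, keepdims=True)  # -100..0 ms
    avg = kept.mean(axis=0)
    # First-order band edges run forward and backward: zero phase and roughly
    # 12 dB/octave roll-off (an approximation of the stated filter).
    b, a = butter(1, [1.0, 30.0], btype="bandpass", fs=FS)
    return filtfilt(b, a, avg), int(keep.sum())


def n1_latency_ms(avg_uv, window_ms=(70, 250)):
    """Latency of the most negative point in a hypothetical N1 search window."""
    in_win = (T_MS >= window_ms[0]) & (T_MS <= window_ms[1])
    return int(T_MS[in_win][np.argmin(avg_uv[in_win])])
```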

Results

/da/ vs. /ta/

A Wilcoxon signed-rank test was performed to compare the N1 latency of the voiced stop CV /da/ with that of the voiceless stop CV /ta/. Also referred to as the Wilcoxon matched-pairs signed-rank test, this is the non-parametric alternative to the repeated-measures t-test. N1 latency differed significantly between the two conditions (Z = -2.814, p < 0.05).
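The Z values reported for the Wilcoxon tests come from the normal approximation to the signed-rank statistic. The Python sketch below computes that approximation directly, with average ranks and the usual tie correction; the latencies are invented for illustration and are not the study's data. For n = 10 with every difference in the same direction and no ties, Z = -27.5/√96.25 ≈ -2.80, so reported values near -2.8 are close to the largest magnitude this test can produce with ten pairs.

```python
import numpy as np
from scipy.stats import norm, rankdata


def wilcoxon_signed_rank_z(x, y):
    """Normal-approximation Z for the Wilcoxon signed-rank test on paired x, y.

    Zero differences are dropped, tied |differences| receive average ranks, and
    the variance includes the tie correction. Z is computed from the smaller of
    the two rank sums, so it is <= 0.
    """
    d = np.asarray(y, dtype=float) - np.asarray(x, dtype=float)
    d = d[d != 0]
    n = d.size
    ranks = rankdata(np.abs(d))
    w = min(ranks[d > 0].sum(), ranks[d < 0].sum())
    _, tie_counts = np.unique(np.abs(d), return_counts=True)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - np.sum(tie_counts**3 - tie_counts) / 48
    z = (w - mean) / np.sqrt(var)
    return z, 2 * norm.cdf(z)  # two-sided p-value


# Hypothetical N1 latencies (ms) for 10 participants; NOT the study's data.
da = np.array([95, 98, 101, 97, 104, 99, 93, 100, 102, 96])
ta = da + np.array([3, 9, 6, 12, 1, 8, 10, 4, 7, 5])
z, p = wilcoxon_signed_rank_z(da, ta)
print(f"Z = {z:.3f}, p = {p:.4f}")
```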

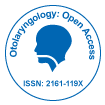

N1 latency was earlier for the voiced stop CV /da/ than for the voiceless stop CV /ta/, as shown in Figure 1A: mean N1 latency was 99 ms (± 4.36 ms) for /da/ and 108 ms (± 7.20 ms) for /ta/ (Figure 1C).

/ba/ vs. /pa/

A Wilcoxon signed-rank test comparing the N1 latency of the voiced stop CV /ba/ with that of the voiceless stop CV /pa/ showed a significant difference (Z = -2.809, p < 0.05). N1 latency was earlier for /ba/ than for /pa/, as shown in Figure 1B: mean N1 latency was 100 ms (± 11.21 ms) for /ba/ and 106 ms (± 9.72 ms) for /pa/ (Figure 1C).

Speech-in-noise

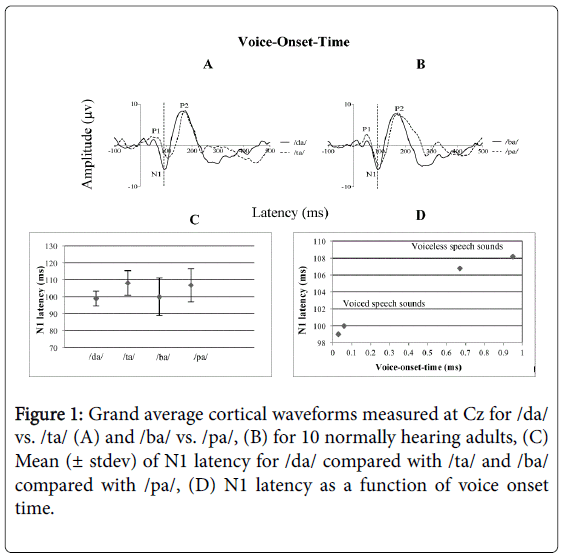

A Friedman test (the non-parametric alternative to one-way repeated-measures analysis of variance) was performed to compare N1 latencies across SNR conditions. N1 latency differed significantly across SNR conditions (χ²(5) = 36.037, p < 0.05), as shown in Figures 2A and 2B. Mean N1 latency increased as SNR decreased: 96.0 ms (± 5.41 ms) at +20 dB, 102.6 ms (± 8.85 ms) at +10 dB, 118.3 ms (± 13.17 ms) at +5 dB, 123.5 ms (± 15.27 ms) at 0 dB, 129.1 ms (± 15.80 ms) at -5 dB and 145.3 ms (± 18.72 ms) at -10 dB. No response was observable at -20 dB in any participant.
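The Friedman statistic ranks each participant's latencies across conditions and compares the rank sums. Because no N1 was observed at -20 dB SNR, six conditions enter the test, which matches the reported 5 degrees of freedom. The Python sketch below implements the untied formula and checks it against `scipy.stats.friedmanchisquare`; the latencies are hypothetical and are not the study's data. When every participant's latency rises monotonically as SNR decreases, as in this toy example, the statistic reaches its maximum of n(k − 1) = 50 for n = 10 and k = 6.

```python
import numpy as np
from scipy.stats import friedmanchisquare, rankdata


def friedman_chi2(data):
    """Friedman statistic and df for an (n participants x k conditions) array.

    Ranks within each participant; no tie correction (assumes no ties).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    ranks = np.apply_along_axis(rankdata, 1, data)
    r = ranks.sum(axis=0)
    chi2 = 12.0 / (n * k * (k + 1)) * np.sum(r**2) - 3.0 * n * (k + 1)
    return chi2, k - 1


# Hypothetical latencies (ms): 10 participants x 6 SNRs (+20 to -10 dB); NOT study data.
base = np.array([96.0, 103.0, 118.0, 124.0, 129.0, 145.0])
data = base + np.arange(-5, 5)[:, None]  # per-participant offset, order preserved
chi2, df = friedman_chi2(data)
ref = friedmanchisquare(*data.T)         # one array per condition
print(f"chi2({df}) = {chi2:.3f}; scipy: {ref.statistic:.3f}, p = {ref.pvalue:.2g}")
```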

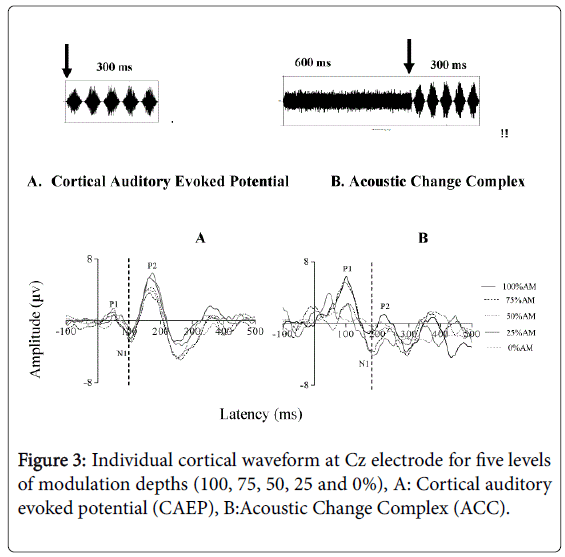

Amplitude-modulation (AM) of broadband noise

The aim of this test was to assess objectively the sensitivity of normal-hearing listeners to amplitude modulation (AM) of broadband noise, which is important for speech intelligibility [28]. Two conditions were evaluated to measure the temporal processing ability of normally hearing adults: (i) 300 ms AM-BBN and (ii) 300 ms AM-BBN following a 600 ms BBN. A Friedman test was performed to compare N1 latency across modulation depths. For the AM-BBN stimulus alone, N1 latency did not differ significantly across the five modulation depths (100%, 75%, 50%, 25%, 0% AM; χ²(4) = 6.85, p > 0.05), as shown in Figure 3A, although slight, non-significant and non-systematic differences in N1 latency were observed. Similarly, for the AM-BBN following a BBN, N1 latency did not differ significantly across modulation depths (100%, 75%, 50%, 25%, 0% AM; χ²(4) = 4.161, p > 0.05). In this condition, when the unmodulated BBN was followed by AM-BBN with 0% modulation depth, no N1 component was identifiable in any participant, as shown in Figure 3B.

Further analysis used a Wilcoxon signed-rank test to compare the two conditions (300 ms AM-BBN versus 300 ms AM-BBN following a 600 ms BBN) at each depth separately. N1 latency differed significantly between the two conditions at 100% (Z = -2.706, p < 0.05), 75% (Z = -2.708, p < 0.05), 50% (Z = -2.705, p < 0.05) and 25% (Z = -2.707, p < 0.05).

Discussion

The aim of this study was to develop and evaluate three electrophysiological measures of temporal processing in normally hearing adults with normal temporal processing abilities as measured by the TMTF. Because previous studies had shown that N1 latency was more sensitive than other CAEP components to the neural timing of acoustic stimuli, changes in N1 latency formed the basis of the study. Overall, N1 latency differed systematically with changes in voice-onset time and signal-to-noise ratio, but not with modulation depth for the AM-BBN presented alone or following an unmodulated BBN. Nevertheless, N1 latency differed significantly between the AM-BBN alone and the same signal following a BBN when each depth was tested separately.

Voice-onset-time

The main finding from the VOT measure was that N1 latency was earlier for the voiced stop CVs /da/ and /ba/ than for the voiceless stop CVs /ta/ and /pa/, suggesting that normally hearing adults encode voiced and voiceless stop CV syllables differently. One possible explanation is that voiced and voiceless stop CVs are encoded differently at the cortical level in normal-hearing listeners because of the neural timing differences (temporal spacing) between the two types of speech stimuli [8]. In the human auditory cortex these temporal cues are represented by the synchronized responses of neuronal populations time-locked to the onset of the acoustic stimulus [11,47,54]. That is, the CAEP has time-locked components whose latencies are determined by the temporal cues of these speech stimuli.

Our results are consistent with previous studies that found earlier CAEP latencies for stimuli with shorter VOTs, such as /da/ and /ba/, and later CAEP latencies for stimuli with longer VOTs, such as /ta/ and /pa/ [13]. Our results also support previous proposals that N1 latency is sensitive to the neural timing of acoustic stimuli and can be used as an objective test of perceptual dysfunction in populations with poor temporal processing ability.

Temporal cues such as VOT are important for speech perception. For example, a voiced stop consonant in word-initial position, as in /da/, is distinguished from its voiceless counterpart /ta/ by a temporal cue: the interval between consonant release and the onset of voicing.

Psychoacoustic studies have established the importance of VOT as a temporal cue for the perceptual discrimination of voiced from voiceless speech sounds. The perception of voicing onset depends mainly on VOT and on the short formant transition from consonant to vowel [55]. Individuals with temporal processing deficits, such as those with ANSD, have been found to perceive the voicing onset of stop CV syllables poorly. For example, Narne and Vanaja [24] found that individuals with ANSD made speech perception errors involving stop consonants distinguished by VOT (e.g., /d/-/t/), perceiving the voiced /d/ as voiceless /t/. VOTs are short acoustic events that have been shown to be important in differentiating stop consonants [56], and the poor perception of voicing onset in individuals with ANSD may reflect difficulty in detecting the duration of these short events as a result of disrupted temporal processing [57]. Interestingly, children with language and reading difficulties and associated temporal processing problems also often show impaired speech sound discrimination, especially for VOT contrasts [58].

We have shown electrophysiologically in this study that the VOT of stop CV syllables evoked a cortical response whose N1 latency varied systematically with the time at which stimulus voicing began (see Figure 1D). These results suggest that the four CV syllables /da/, /ta/, /ba/ and /pa/, which differ in voicing onset, could be used with the CAEP to assess temporal processing electrophysiologically in infants and young children suspected of having temporal processing disorders. Such an assessment may assist in the early identification of, and intervention for, temporal processing disruption.

Speech-in-noise

There was a clear effect of SNR on N1 latency in this study: as the noise level increased, N1 latency was significantly prolonged. These results suggest that, in normally hearing adults, cortical detection of the onset of the speech stimulus /da/ is delayed as the noise level increases. In the most difficult listening condition, -20 dB SNR, no identifiable N1 waveform was recorded for any participant. This finding suggests that, at -20 dB SNR in everyday communication, speech intelligibility may be substantially degraded even for normally hearing adult listeners.
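A short sketch can make the SNR manipulation concrete. If SNR is defined from root-mean-square (RMS) levels, SNR (dB) = 20 log10(RMS_signal / RMS_noise), then a noise token can be rescaled to reach any target SNR while the speech level stays fixed. This is consistent with the description of the noise level increasing across conditions. The code is a hypothetical Python illustration and not the authors' stimulus-generation procedure. The helper names, the use of whole-token RMS, the sine placeholder standing in for the /da/ token and the SNR values in the loop are all assumptions.

```python
import math
import random


def rms(x):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def snr_db(signal, noise):
    """SNR in dB from RMS levels (an assumed definition)."""
    return 20.0 * math.log10(rms(signal) / rms(noise))


def mix_at_snr(signal, noise, target_snr_db):
    """Rescale noise so the mixture has target_snr_db; signal level is unchanged.

    Returns (mixture, scaled_noise).
    """
    if len(noise) < len(signal):
        raise ValueError("noise must be at least as long as the signal")
    noise = noise[: len(signal)]
    gain = rms(signal) / (rms(noise) * 10.0 ** (target_snr_db / 20.0))
    scaled = [gain * n for n in noise]
    return [s + n for s, n in zip(signal, scaled)], scaled


if __name__ == "__main__":
    random.seed(0)
    fs = 16000
    # Placeholder "speech": a 100 ms 1 kHz tone (hypothetical stand-in for /da/).
    speech = [math.sin(2 * math.pi * 1000 * i / fs) for i in range(fs // 10)]
    noise = [random.gauss(0.0, 1.0) for _ in range(fs // 10)]
    for target in (10.0, 0.0, -10.0, -20.0):
        _, scaled = mix_at_snr(speech, noise, target)
        print(target, round(snr_db(speech, scaled), 6))
```

Holding the signal fixed and scaling only the noise means that each step down in SNR corresponds to a rise in noise level, which matches the way the results are described above.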

Individuals with ANSD typically report an impaired ability to understand speech, especially in the presence of background noise [23,59]. The auditory processes that contribute to this deficit in noise appear to be related to abnormal temporal processing in ANSD [26,57]. Cunningham et al. [59] showed that some individuals with ANSD in their study who scored 90% on speech perception in quiet scored 40% when tested at 10 dB SNR, with a further marked drop to 5% at 0 dB SNR. Kraus et al. [20] presented a case study of an individual with ANSD who had normal hearing thresholds and a speech perception score of 100% in quiet; in background noise at +3 dB SNR, however, the score fell to 10%. Furthermore, many studies have shown that children diagnosed with language-based learning disabilities, such as dyslexia and specific language impairment, show distorted timing of cortical responses when acoustic signals are presented in noise [60,61].

These results have implications for populations with difficulty listening in noise, such as individuals with ANSD, and for those with temporal processing deficits. Further studies of speech-in-noise encoding in these populations are needed to understand how altered temporal information at the level of the cortex impairs listening. This research may assist in the early identification of, and intervention for, temporal processing disruption in infants, young children and adults whose auditory temporal processing abilities are difficult to assess with behavioural measures.

Amplitude-modulated broadband noise

This study of young normally hearing adults found that the N1 peak of the CAEP could be elicited at each modulation depth tested (100%, 75%, 50%, 25% and 0%). This finding shows that cortical neurons detect overall (temporal) amplitude changes within the acoustic signal, even at the smallest depth tested (25% AM), and that this ability can be measured objectively. That is, the temporal modulation information in the acoustic stimuli was represented by the N1 component at the level of the auditory cortex. Such small changes matter for speech intelligibility, since speech consists of time-varying signals and the information carried by its dynamic temporal structure is crucial for speech identification and communication. Individuals with poor temporal processing ability have particular difficulty detecting these small amplitude changes [22,26,57]. This difficulty reduces their speech intelligibility, and the effect is worse in background noise.
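The sketch below makes the modulation-depth manipulation concrete. It generates sinusoidally amplitude-modulated noise, x(t) = n(t)[1 + m sin(2π f_m t)], where m is the modulation depth (1.0 = 100%, 0 = unmodulated) and f_m is the 16 Hz modulation rate given in the abstract. The code also converts depth to 20 log10(m) dB, the scale on which temporal modulation transfer functions are usually plotted. Several details are assumptions rather than details reported by the authors: Gaussian white noise stands in for the BBN, the sine starting phase is fixed, and the function names are hypothetical. A presentation-ready stimulus would also be rescaled to a fixed RMS, so that overall level (65 dB SPL in this study) does not change with depth. That step is omitted here.

```python
import math
import random


def am_bbn(duration_s, fs_hz, mod_depth, mod_freq_hz=16.0, seed=None):
    """Gaussian noise multiplied by 1 + m*sin(2*pi*fm*t), with 0 <= m <= 1."""
    if not 0.0 <= mod_depth <= 1.0:
        raise ValueError("modulation depth must lie in [0, 1]")
    rng = random.Random(seed)
    n_samples = int(round(duration_s * fs_hz))
    return [
        rng.gauss(0.0, 1.0)
        * (1.0 + mod_depth * math.sin(2.0 * math.pi * mod_freq_hz * i / fs_hz))
        for i in range(n_samples)
    ]


def depth_db(mod_depth):
    """Modulation depth as 20*log10(m); an unmodulated carrier maps to -inf dB."""
    return 20.0 * math.log10(mod_depth) if mod_depth > 0 else float("-inf")


if __name__ == "__main__":
    # 300 ms tokens at the five depths tested; the 16 kHz rate is an assumption.
    stimuli = {m: am_bbn(0.3, 16000, m, seed=1) for m in (1.0, 0.75, 0.5, 0.25, 0.0)}
    print([round(depth_db(m), 2) for m in (1.0, 0.75, 0.5, 0.25)])
    # prints [0.0, -2.5, -6.02, -12.04]
```

On this scale the smallest depth tested (25%) lies about 12 dB below full modulation.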

Although the results indicate a slight shift in N1 latency as modulation depth changed in both conditions, this effect was not significant. In the first condition (AM-BBN, 300 ms), we assume that N1 latency did not change significantly with modulation depth because the CAEP waveform is dominated by the stimulus onset and therefore reflects its characteristics [11,47,54]. That is, the onset time was 0 ms for all modulation depths and only the depth varied. The cortical neurons were therefore less sensitive to modulation depth in this condition, and no significant change in N1 latency was observed.

In the second condition, unmodulated BBN (600 ms) followed by AM-BBN (300 ms), we assume that the following explains why N1 latency did not change systematically or significantly with modulation depth. The first N1 corresponds to the onset of the unmodulated BBN (600 ms), a change from silence to sound (an onset response). The second N1 corresponds to the transition from the unmodulated BBN to the AM-BBN, which occurs within the ongoing stimulus and elicits the acoustic change complex (ACC). This second response therefore reflects acoustic change within the stimulus [40]. The ACC was reliably recorded in our participants, and our data confirm that N1 latency can be measured in response to changes in modulation depth within ongoing stimuli. However, the N1 of the ACC to the AM-BBN portion is considerably smaller than the N1 to the onset of the unmodulated BBN (600 ms). We assume that the cortical regions activated by the two parts of the stimulus (unmodulated BBN and AM-BBN) are not entirely separate but overlap. In the overlapping region, the “line-busy effect” hypothesis of Stevens and Davis [61] would explain the reduced amplitude of the response to the AM-BBN (the ACC). Under this hypothesis, cortical neurons already activated (firing) by the unmodulated BBN (the onset response) interfere with synchronous responses to the AM-BBN. This overlap would reduce the overall number of cortical neurons that respond synchronously to the AM-BBN [63-82]. With fewer cortical neurons firing to the second part of the ongoing stimulus, the N1 of the ACC would be smaller than the N1 of the onset response to the unmodulated BBN. Consequently, no significant effect of modulation depth on the N1 latency of the ACC would be observed.
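The structure of the second condition can be sketched as a single waveform. This shows why its second N1 is a response to acoustic change rather than a silence-to-sound onset. The waveform consists of 600 ms of unmodulated noise followed by 300 ms of 16 Hz AM noise, and the index of the modulation onset is returned so that an ACC epoch could be time-locked to it. This is a hypothetical Python illustration, and several details are assumptions: the function name, the Gaussian noise carrier, the optional `gap_ms` parameter, and a modulator that starts at zero phase (so the envelope begins at the same expected level as the preceding noise). Setting `gap_ms` above zero inserts silence before the AM portion. That would make the second response an onset to a “new sound”, which is the alternative interpretation considered in the next paragraph.

```python
import math
import random


def bbn_then_am(fs_hz, mod_depth, mod_freq_hz=16.0, pre_ms=600.0,
                am_ms=300.0, gap_ms=0.0, seed=None):
    """Unmodulated noise, optional silent gap, then sinusoidally AM noise.

    Returns (samples, change_index), where change_index is the first AM sample,
    i.e. the acoustic change to which an ACC epoch would be time-locked.
    """
    rng = random.Random(seed)
    n_pre = int(round(pre_ms * fs_hz / 1000.0))
    n_gap = int(round(gap_ms * fs_hz / 1000.0))
    n_am = int(round(am_ms * fs_hz / 1000.0))
    pre = [rng.gauss(0.0, 1.0) for _ in range(n_pre)]
    gap = [0.0] * n_gap
    # Modulator phase restarts at 0 at the change, so the envelope starts at 1.
    am = [
        rng.gauss(0.0, 1.0)
        * (1.0 + mod_depth * math.sin(2.0 * math.pi * mod_freq_hz * i / fs_hz))
        for i in range(n_am)
    ]
    return pre + gap + am, n_pre + n_gap


if __name__ == "__main__":
    fs = 16000
    stim, change = bbn_then_am(fs, mod_depth=0.5, seed=3)
    print(len(stim) / fs, change / fs)  # prints 0.9 0.6
```

With `gap_ms` left at zero, the only thing that changes at 600 ms is the presence of modulation, which corresponds to the "ongoing change" interpretation above.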

A second possibility is that the cortical neurons activated by the two parts of the stimulus (unmodulated BBN and AM-BBN) are separate. In that case, the ACC is a response to a “new sound” created by the gap between the two stimuli: it would be an onset response rather than a response to the ongoing AM-BBN, and different populations of cortical neurons might fire to that “new sound”.

The N1 latency of the ACC, together with behavioural detection of the AM-BBN, may indicate whether a person is sensitive to amplitude modulation within an ongoing stimulus. We did not observe a significant effect on N1 latency. Further study of N1 amplitude and of other CAEP components, such as P1, P2 and N2, might nevertheless clarify whether different generators are activated in response to the amplitude-modulated signal. Clearly, however, much additional work will be needed to establish the clinical utility of this measure.

Conclusion

The results of the present study suggest that N1 latency could be used as an objective test of temporal processing ability in individuals with temporal processing disruption who are particularly challenged by background noise and who are difficult to assess by behavioural response. However, further work with a larger normally hearing population, and with a population with temporal processing disorders, is needed to validate these tests clinically. The present study represents the first publication of normative data for adults on one of our electrophysiological temporal processing measures (AM-BBN). This is, however, the first time we have used the AM paradigm, and its aim was only to investigate whether N1 could be elicited by different AM depths in young adults. Further investigation is therefore needed to understand the relationship between N1 amplitude and AM depth.

References

- Musiek F (2003) Temporal processing: The basics. Hearing Journal 56: 52.

- Farmer M, Klein R (1995) The evidence for a temporal processing deficit linked to dyslexia: A review. Psychonomic Bulletin & Review 2: 460-493.

- Tallal P, Miller SL, Bedi G, Byma G, Wang X, et al. (1996) Language comprehension in language-learning impaired children improved with acoustically modified speech. Science 271: 81-84.

- Wright BA, Lombardino LJ, King WM, Puranik CS, Leonard CM, et al. (1997) Deficits in auditory temporal and spectral resolution in language-impaired children. Nature 387: 176-178.

- Tallal P, Miller S, Fitch RH (1993) Neurobiological basis of speech: A case for the preeminence of temporal processing. Annals of the New York Academy of Sciences 682: 27-47.

- Michalewski HJ, Starr A, Zeng FG, Dimitrijevic A (2009) N100 cortical potentials accompanying disrupted auditory nerve activity in auditory neuropathy (AN): Effects of signal intensity and continuous noise. Clinical Neurophysiology 120: 1352-1363.

- Michalewski HJ, Starr A, Nguyen T, Kong Y, Zeng F, et al. (2005) Auditory temporal processing in normal-hearing individuals and patients with auditory neuropathy. Clinical Neurophysiology 116: 669-680.

- Roman S, Canevet G, Lorenzi C, Triglia J, Liegeois-Chauvel C (2003) Voice onset time encoding in patients with left and right cochlear implants. Neuroreport 15: 601-605.

- Warrier C, Johnson K, Hayes E, Nicol T, Kraus N (2004) Learning impaired children exhibit timing deficits and training-related improvements in auditory cortical responses to speech in noise. Experimental Brain Research 157: 431-441.

- Giraud AL, Lorenzi C, Ashburner J, Wable J, Johnsrude I, et al. (2000) Representation of the temporal envelope of sounds in the human brain. J Neurophysiol 84: 1588-1598.

- Steinschneider M, Volkov I, Noh M, Garell C, Howard M (1999) Temporal encoding of the voice onset time phonetic parameter by field potentials recorded directly from human auditory cortex. Journal of Neurophysiology 82: 2346-2357.

- Lisker L, Abramson A (1964) A cross-language study of voicing in initial stops: Acoustical measurements. Word 20: 384-422.

- Sharma A, Dorman MF (1999) Cortical auditory evoked potential correlates of categorical perception of voice-onset time. J Acoust Soc Am 106: 1078-1083.

- Phillips DP, Farmer ME (1990) Acquired word deafness, and the temporal grain of sound representation in the primary auditory cortex. Behavioural Brain Research 40: 85-94.

- Phillips DP, Hall SE (1986) Spike-rate intensity functions of cat cortical neurons studied with combined tone-noise stimuli. J Acoust Soc Am 80: 177-187.

- Billings CJ, Tremblay KL, Stecker C, Tolin W (2009) Human evoked cortical activity to signal-to-noise ratio and absolute signal level. Hear Res 254: 15-24.

- Rosen S (1992) Temporal information in speech: Acoustic, auditory and linguistic aspects. Philosophical Transactions: Biological Sciences 336: 367-373.

- Houtgast T, Steeneken HJ (1985) A review of the MTF concept in room acoustics and its use for estimating speech intelligibility in auditoria. Journal of the Acoustical Society of America 77: 1069-1077.

- Drullman R, Festen JM, Plomp R (1994) Effect of temporal envelope smearing on speech reception. J Acoust Soc Am 95: 1053-1064.

- Kraus N, Bradlow AR, Cheatham MA, Cunningham J, King CD, et al. (2000) Consequences of neural asynchrony: A case of auditory neuropathy. J Assoc Res Otolaryngol 1: 33-45.

- Kumar AU, Jayaram M (2005) Auditory processing in individuals with auditory neuropathy. Behav Brain Funct 1: 21.

- Rance G, McKay C, Grayden D (2004) Perceptual characterization of children with auditory neuropathy. Ear Hear 25: 34-46.

- Rance G, Barker E, Mok M, Dowell R, Rincon A, et al. (2007) Speech perception in noise for children with auditory neuropathy/dys-synchrony type hearing loss. Ear Hear 28: 351-360.

- Narne VK, Vanaja CS (2008) Effect of envelope enhancement on speech perception in individuals with auditory neuropathy. Ear Hear 29: 45-53.

- Narne VK, Vanaja C (2008) Speech identification and cortical potentials in individuals with auditory neuropathy. Behav Brain Funct 4: 15.

- Zeng FG, Oba S, Garde S, Sininger Y, Starr A (1999) Temporal and speech processing deficits in auditory neuropathy. Neuroreport 10: 3429-3435.

- Lorenzi C, Dumont A, Füllgrabe C (2000) Use of temporal envelope cues by children with developmental dyslexia. Journal of Speech Language and Hearing Research 43: 1367-1379.

- Menell P, McAnally K, Stein J (1999) Psychophysical sensitivity and physiological response to amplitude modulation in adult dyslexic listeners. Journal of Speech, Language, and Hearing Research 42: 797-803.

- Rocheron I, Lorenzi C, Füllgrabe C, Dumont A (2002) Temporal envelope perception in dyslexic children. Neuroreport 13: 1683-1687.

- Witton C, Talcott JB, Hansen PC, Richardson AJ, Griffiths TD, et al. (1998) Sensitivity to dynamic auditory and visual stimuli predicts nonword reading ability in both dyslexic and normal readers. Current Biology 8: 1791-1797.

- Hall JW III (1990) Handbook of auditory evoked responses. Massachusetts: Allyn and Bacon.

- Van Wassenhove V, Grant KW, Poeppel D (2003) Electrophysiology of auditory-visual speech integration. Auditory Visual Speech Processing. St. Jorioz, France.

- Eggermont J (2007) Electric and magnetic fields of synchronous neural activity. In: Burkard RM, Don M, Eggermont J (eds.) Auditory evoked potentials. Philadelphia: Lippincott Williams & Wilkins.

- Näätänen R, Picton T (1987) The N1 wave of the Human electric and magnetic responses to sound: A review and an analysis of the component structure. Psychophysiology 24: 375-425.

- Wolpaw JR, Penry JK (1975) A temporal component of the auditory evoked response. Electroencephalogr Clin Neurophysiol 39: 609-620.

- Whiting KA, Martin BA, Stapells DR (1998) The effects of broadband noise masking on cortical event-related potentials to speech sounds /ba/ and /da/. Ear Hear 19: 218-231.

- Kaukoranta E, Hari R, Lounasmaa OV (1987) Responses of the human auditory cortex to vowel onset after fricative consonants. Exp Brain Res 69: 19-23.

- Martin BA, Boothroyd A (1999) Cortical, auditory, event-related potentials in response to periodic and aperiodic stimuli with the same spectral envelope. Ear Hear 20: 33-44.

- Tremblay KL, Piskosz M, Souza P (2003) Effects of age and age-related hearing loss on the neural representation of speech cues. Clin Neurophysiol 114: 1332-1343.

- Martin BA, Boothroyd A (2000) Cortical, auditory, evoked potentials in response to changes of spectrum and amplitude. J Acoust Soc Am 107: 2155-2161.

- Onishi S, Davis H (1968) Effects of duration and rise time of tone bursts on evoked V potentials. J Acoust Soc Am 44: 582-591.

- Rapin I, Schimmel H, Tourk LM, Krasnegor NA, Pollak C (1966) Evoked responses to clicks and tones of varying intensity in waking adults. Electroencephalogr Clin Neurophysiol 21: 335-344.

- Kaplan-Neeman R, Kishon-Rabin L, Henkin Y, Muchnik C (2006) Identification of syllables in noise: electrophysiological and behavioral correlates. J Acoust Soc Am 120: 926-933.

- Carhart R, Jerger J (1959) Preferred method for clinical determination of pure tone thresholds. Journal of Speech and Hearing Disorders 24: 330-345.

- Viemeister NF (1979) Temporal modulation transfer functions based upon modulation thresholds. J Acoust Soc Am 66: 1364-1380.

- Levitt H (1971) Transformed up-down methods in psychoacoustics. J Acoust Soc Am 49: 467-477.

- Giraud K, Demonet J, Habib M, Chauvel P, Liegeois-Chauvel C (2005) Auditory evoked potential patterns to voiced and voiceless speech sounds in adult developmental dyslexics with persistent deficits. Cerebral Cortex 15: 1524-1534.

- Sharma A, Dorman MF, Spahr AJ (2002) A sensitive period for the development of the central auditory system in children with cochlear implants: Implications for age of implantation. Ear Hear 23: 532-539.

- Sharma A, Kraus N, McGee T, Nicol T (1997) Developmental changes in P1 and N1 central auditory responses elicited by consonant-vowel syllables. Electroencephalography and Clinical Neurophysiology 104: 540-545.

- Sharma A, Marsh CM, Dorman MF (2000) Relationship between N1 evoked potential morphology and the perception of voicing. J Acoust Soc Am 108: 3030-3035.

- Sharma A, Dorman M, Kral A (2005) The influence of a sensitive period on central auditory development in children with unilateral and bilateral cochlear implants. Hearing Research 203: 134-143.

- Picton TW, Alain C, Otten L, Ritter W, Achim A (2000) Mismatch negativity: Different water in the same river. Audiol Neurootol 5: 111-139.

- Gilley PM, Sharma A, Dorman M, Martin K (2005) Developmental changes in refractoriness of the cortical auditory evoked potential. Clinical Neurophysiology 116: 648-657.

- Eggermont JJ (2001) Between sound and perception: Reviewing the search for a neural code. Hear Res 157: 1-42.

- Summerfield Q, Haggard M (1977) On the dissociation of spectral and temporal cues to the voicing distinction in initial stop consonants. J Acoust Soc Am 62: 435-448.

- Pickett J (1999) The acoustics of speech communication. MA: Allyn & Bacon.

- Zeng FG, Kong YY, Michalewski HJ, Starr A (2005) Perceptual consequences of disrupted auditory nerve activity. J Neurophysiol 93: 3050-3063.

- Hornickel J, Skoe E, Nicol T, Zecker S, Kraus N (2009) Subcortical differentiation of stop consonants relates to reading and speech-in-noise perception. Proc Natl Acad Sci U S A 106: 13022-13027.

- Cunningham J, Nicol T, Zecker S, Bradlow A, Kraus N (2001) Neurobiologic responses to speech in noise in children with learning problems: Deficits and strategies for improvement. Clinical Neurophysiology 112: 758-767.

- Wible B, Nicol T, Kraus N (2002) Abnormal neural encoding of repeated speech stimuli in noise in children with learning problems. Clin Neurophysiol 113: 485-494.

- Stevens S, Davis H (1938) Hearing: Its psychology and physiology. New York: Wiley.

- Miller MI, Barta PE, Sachs MB (1987) Strategies for the representation of a tone in background noise in the temporal aspects of the discharge patterns of auditory-nerve fibers. J Acoust Soc Am 81: 665-679.

- Phillips DP (1990) Neural representation of sound amplitude in the auditory cortex: effects of noise masking. Behav Brain Res 37: 197-214.

- Schneider BA, Hamstra SJ (1999) Gap detection thresholds as a function of tonal duration for younger and older listeners. J Acoust Soc Am 106: 371-380.

- Zeng FG, Liu S (2006) Speech perception in individuals with auditory neuropathy. J Speech Lang Hear Res 49: 367-380.

- Bacon SP, Viemeister NF (1985) Temporal modulation transfer functions in normal-hearing and hearing-impaired listeners. Audiology 24: 117-134.

- Barnet AB (1975) Auditory evoked potentials during sleep in normal children from ten days to three years of age. Electroencephalogr Clin Neurophysiol 39: 29-41.

- Berlin CI, Bordelon J, Hurley A (1997) Autoimmune inner ear disease: Basic science and audiological issues. In: Berlin CI (ed.) Neurotransmission and Hearing Loss: Basic Science, Diagnosis and Management pp: 137-146.

- Berlin CI, Bordelon J, St John P, Wilensky D, Hurley A, et al. (1998) Reversing click polarity may uncover auditory neuropathy in infants. Ear Hear 19: 37-47.

- Berlin C, Hood L, Cecola P (1993) Does type I afferent neuron dysfunction reveal itself through lack of efferent suppression? Hearing Research 65: 40-54.

- Berlin C, Hood L, Goforth-Barter L, Bordelon J (1999) Clinical application of auditory efferent studies. In: Berlin CI (ed.) The efferent auditory system: Basic sciences and clinical applications. Singular Publishing Group, San Diego.

- Berlin C, Hood L, Hurley A, Wen H (1994) Contralateral suppression of otoacoustic emissions: An index of the function of the medial olivocochlear system. Archives of Otolaryngology – Head and Neck Surgery 110: 3-21.

- Berlin C, Hood L, Hurley A, Wen H (1996) Hearing aids: Only for hearing impaired patients with abnormal otoacoustic emissions. In: Berlin C (ed.) Hair cells and hearing aids. Singular Publishing Group, San Diego.

- Berlin C, Hood L, Morlet T, Rose K, Brashears S (2003) Auditory neuropathy/dys-synchrony: Diagnosis and management. Mental Retardation and Developmental Disabilities Research Reviews 9: 225-231.

- Berlin C, Hood L, Rose K (2001) On renaming auditory neuropathy as auditory dys-synchrony. Audiology Today 13: 15-22.

- Berlin CI, Morlet T, Hood LJ (2003) Auditory neuropathy/dyssynchrony: its diagnosis and management. Pediatr Clin North Am 50: 331-340, vii-viii.

- Billings CJ, Tremblay KL, Souza PE, Binns MA (2007) Effects of hearing aid amplification and stimulus intensity on cortical auditory evoked potentials. Audiology and Neuro-Otology 12: 234-246.

- Bishop DV (1997) Uncommon understanding: Development and disorders of language comprehension in children. Hove: Psychology press.

- Bishop DV, Carlyon R, Deeks J, Bishop S (1999) Auditory temporal processing impairment: Neither necessary nor sufficient for causing language impairment in children. Journal of Speech, Language, and Hearing Research 42: 1295-1310.

- Bradlow AR, Kraus N, Hayes E (2003) Speaking clearly for children with learning disabilities: Sentence perception in noise. Journal of Speech Language and Hearing Research 46: 80-97.

- Drullman R, Festen JM, Plomp R (1994) Effect of reducing slow temporal modulations on speech reception. J Acoust Soc Am 95: 2670-2680.

- Mason JC, De Michele A, Stevens C, Ruth RA, Hashisaki GT (2003) Cochlear implantation in patients with auditory neuropathy of varied etiologies. Laryngoscope 113: 45-49.

Citation: Almeqbel A, McMahon C (2016) Auditory Cortical Temporal Processing Abilities in Young Adults. Otolaryngol (Sunnyvale) 6:238. DOI: 10.4172/2161-119X.1000238

Copyright: © 2016 Almeqbel A, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.
